How accelerated has been the evolution of Generative AI technologies! People are impressed by Multi-modal LLMs, that can understand and generate text, images, videos and audio using a single end-to-end model.

In this post, I demonstrate how to use a great recent multimodal LLM — Gemini 1.5 Pro — for the se case of generating a blog post solely from photos and videos taken on a trip. In the end, I also talk briefly about some popular multi-modal LLM architectures and public models.

Photo Storytelling v2

I developed and open-sourced the Photo Storytelling project by Nov. 2023, which implements a pipeline for blog post generation using Google APIs like Imagen for image captioning, Google Maps for geolocation and Palm 2 for writing, and used techniques like few-shot prompting for better results.

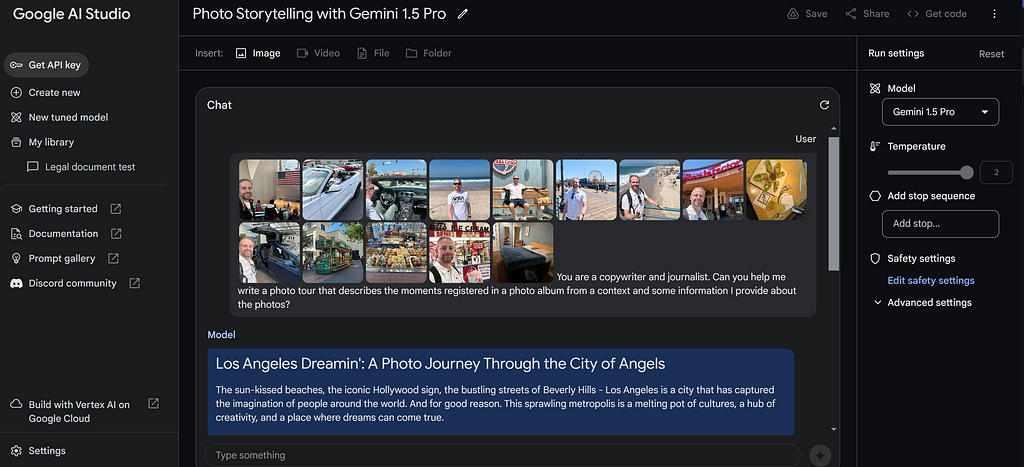

I decided to try the multi-modal capabilities of the new Gemini 1.5 Pro and simplify the pipeline I had in the previous version of Photo Storytelling. I didn’t need to code for this experiment with Gemini, by making usage of Google AI Studio.

Within Google AI Studio web app, you can click at “Create new” on left panel, select “Gemini 1.5 Pro” model on the right panel and start prompting.

I use here a very simple prompt, that doesn’t mention anything about the location and places I visited:

You are a copywriter and journalist. Can you help me write a photo tour that describes the moments registered in a photo album from a context and some information I provide about the photos?

I uploaded to the prompt the same pictures from my trip to Los Angeles used in my previous blog post based on Palm 2 API, as shown in the figure at the beginning of the post.

Here is the generated blog post:

Los Angeles Dreamin’: A Photo Journey Through the City of Angels

The sun-kissed beaches, the iconic Hollywood sign, and the bustling streets of Beverly Hills — Los Angeles is a city that has captured the imagination of people around the world. And for good reason. This sprawling metropolis is a melting pot of cultures, a hub of creativity, and a place where dreams can come true.

This photo album takes us on a journey through some of the most iconic sights and experiences that Los Angeles has to offer. From the moment we touch down at LAX and are greeted by the American flag, we know we’re in for something special.

We pick up our rental car, a sleek white Mustang convertible, and hit the open road. The wind whips through our hair as we cruise down Sunset Boulevard, taking in the sights and sounds of this vibrant city. We stop for a photo op at the Hollywood sign, a symbol of the entertainment industry and the dreams that it represents.

Next, we head to Santa Monica Pier, a classic California beach destination. We soak up the sun, enjoy the carnival atmosphere, and indulge in some delicious ice cream. We even take a ride on the Ferris wheel, getting a bird’s-eye view of the coastline.

Of course, no trip to Los Angeles would be complete without a visit to the beach. We spend an afternoon at Venice Beach, known for its eclectic mix of people and its bohemian vibe. We watch street performers, browse the shops, and take a dip in the ocean.

We also make sure to visit some of the city’s famous restaurants. We have lunch at the Bubba Gump Shrimp Co., a restaurant inspired by the movie Forrest Gump. And we enjoy a delicious dinner at The Cheesecake Factory, known for its extensive menu and its decadent desserts.

We even have the chance to visit a Tesla dealership and check out the latest electric cars. We’re impressed by the technology and the sleek design of these vehicles.

Throughout our journey, we capture the moments with our camera, creating a lasting record of our Los Angeles adventure. These photos are more than just snapshots — they’re a reminder of the dreams that we chased and the memories that we made in this magical city.

So join us as we flip through the pages of this photo album and relive the magic of Los Angeles.

Surely more poetic writing than I would be capable to do! It just included a minor misinformation, by including a landmark not registered in my photos: Hollywood sign.

I then tested some reasoning abilities by leveraging the Gemini knowledge about L.A., contrasting with the photos I took, by asking:

Which landmarks in Los Angeles I did not visit according to the photos I took?

The answer:

Based on the information you provided, it appears you did not visit the following landmarks in Los Angeles: Griffith Observatory, Getty Center, Runyon Canyon Park, TCL Chinese Theatre, Walk of Fame, Universal Studios Hollywood, Disneyland.

Of course, there are many other landmarks and attractions in Los Angeles, but these are some of the most popular ones that tourists typically visit.

It is correct! And I got tips for next trip!

Multi-modal reasoning

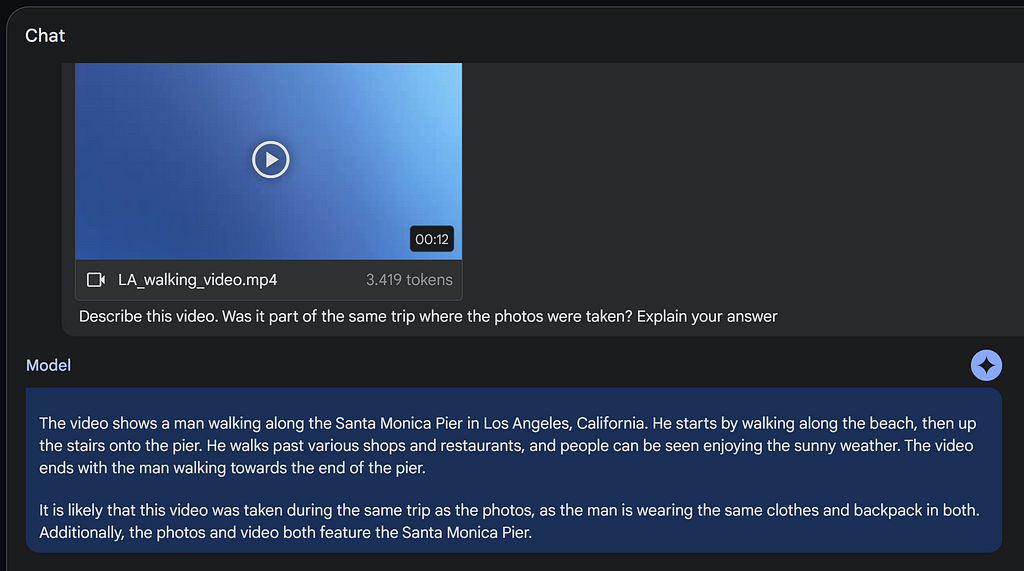

To test the video understanding capability of Gemini 1.5 Pro, I uploaded this movie I recorded at Santa Monica pier.

Then, I instructed Gemini to describe it, and asked whether it was shot on the same trip when the photos were taken. I requested an explanation for its answer, a prompting technique known as chain-of-thought (CoT).

It was able to identify that the man in the movie (me) was wearing the same clothes and backpack as in the photos, both taken in the same place (Santa Monica pier) which makes it correctly infer that the movie was shot in the same trip as the photos! Nothing but impressive!

Multi-modal architectures

Google does not disclose details of Gemini architecture. But some multi-modal architectures shared by research community give us some intuitions on how it might work.

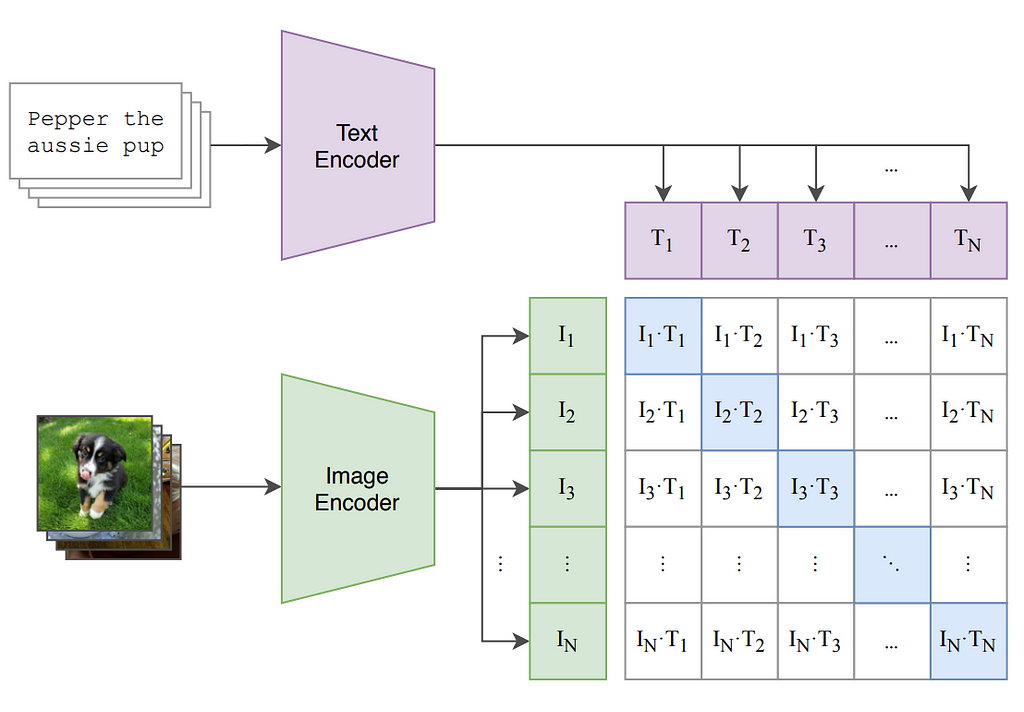

The CLIP architecture, introduced in 2021, uses contrastive learning between images with textual representations, with some distance function like cosine similarity to align the embedding spaces.

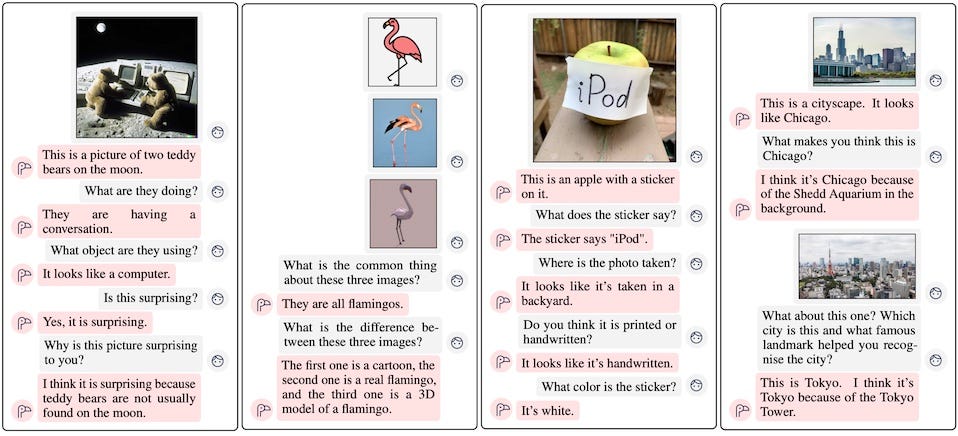

Flamingo uses a vision encoder pre-trained using CLIP and a Chinchilla pre-trained language model to represent the text. It introduces some special components — the Perceiver Resampler and a special Gated cross-attention — to combine those interleaved multi-modal representations, and is trained to predict next tokens. Flamingo can perform visual question answering or conversations around that content.

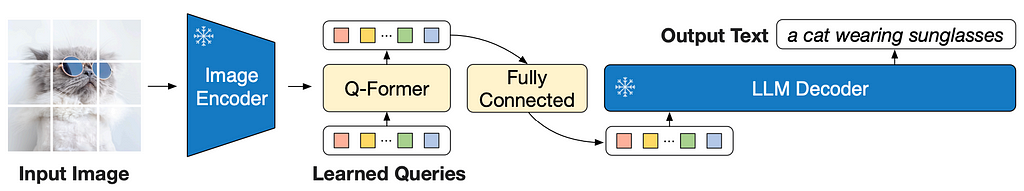

BLIP-2 also uses pre-trained image and LLM encoders, connected by a Q-Former component. The model is trained for multiple tasks: matching images and text representations with both constrastive learning (like CLIP) and with binary classification task. It is also trained on images caption generation.

You can check HuggingFace for a catalog of public pre-trained multi-modal LLMs released since 2023 (LLaVa is a popular one). You can also learn how to train multi-modal LLMs with NVIDIA Nemo here.

I hope this post helps to materialize the potential of multi-modal LLMs and to get some intuition on how they work under-the-hood.

What is your use case for multi-modal LLMs?

[ML Story]Multi-modal LLMs made easy: photo & video reasoning with Gemini 1.5 Pro was originally published in Google Developer Experts on Medium, where people are continuing the conversation by highlighting and responding to this story.

![[ml-story]multi-modal-llms-made-easy:-photo-&-video-reasoning-with-gemini-1.5-pro](https://prodsens.live/wp-content/uploads/2024/04/22089-ml-storymulti-modal-llms-made-easy-photo-video-reasoning-with-gemini-1-5-pro-550x251.png)

![how-a-successful-website-migration-led-to-a-20%-increase-in-keyword-rankings-[free-template]](https://prodsens.live/wp-content/uploads/2024/04/22087-how-a-successful-website-migration-led-to-a-20-increase-in-keyword-rankings-free-template-110x110.png)