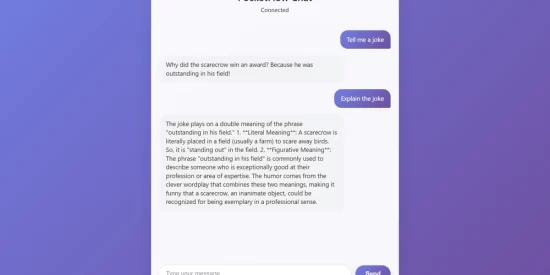

Ever watched ChatGPT type back to you word by word, like it’s actually thinking out loud? That’s streaming AI in action, and it makes web apps feel incredibly alive! Today, we’re building exactly that: a real-time AI chatbot web app where responses flow in instantly. No more staring at loading spinners! We’ll use FastAPI for lightning-fast backends, WebSockets for live chat magic, and PocketFlow to keep things organized. Ready to make your web app feel like a real conversation? You can find the complete code for this part in the FastAPI WebSocket Chat Cookbook.

1. Why Your AI Web App Should Stream (It’s a Game Changer!) 🚀

Picture this: You ask an AI a question, then… you wait. And wait. Finally, BOOM – a wall of text appears all at once. Feels clunky, right?

Now imagine this instead: You ask your question, and the AI starts “typing” back immediately – word by word, just like texting with a friend. That’s the magic of streaming for AI web apps.

Why streaming rocks: It feels lightning fast, keeps users engaged, and creates natural conversation flow. No more “is this thing broken?” moments!

We’re creating a live AI chatbot web app that streams responses in real-time. You’ll type a message, and watch the AI respond word by word, just like the pros do it.

Our toolkit:

- 🔧 FastAPI – Blazing fast Python web framework

- 🔧 WebSockets – The secret sauce for live, two-way chat

- 🔧 PocketFlow – Our LLM framework in 100 lines

Quick catch-up on our series:

- Part 1: Built command-line AI tools ✅

- Part 2: Created interactive web apps with Streamlit ✅

- Part 3 (You are here!): Real-time streaming web apps 🚀

- Part 4 (Coming next!): Background tasks for heavy AI work

Want to see streaming in action without the web complexity first? Check out our simpler guide: “Streaming LLM Responses — Tutorial For Dummies“.

Ready to make your AI web app feel like magic? Let’s dive in!

2. FastAPI + WebSockets = Real-Time Magic ⚡

To build our streaming chatbot, we need two key pieces: FastAPI for a blazing-fast backend and WebSockets for live, two-way chat.

FastAPI: Your Speed Demon Backend

FastAPI is like the sports car of Python web frameworks – fast, modern, and async-ready. Perfect for AI apps that need to handle multiple conversations at once.

Most web apps work like old-school mail: Browser sends request → Server processes → Sends back response → Done. Here’s a basic FastAPI example:

from fastapi import FastAPI

app = FastAPI()

@app.get("/hello")

async def say_hello():

return {"greeting": "Hi there!"}

What’s happening here?

-

app = FastAPI()– Creates your web server -

@app.get("https://dev.to/hello")– Says “when someone visits/hello, run the function below” -

async def say_hello()– The function that handles the request -

return {"greeting": "Hi there!"}– Sends back JSON data to the browser

When you visit http://localhost:8000/hello, you’ll see {"greeting": "Hi there!"} in your browser!

Your First FastAPI App Flow:

Simple enough, but for chatbots we need something more interactive…

WebSockets: Live Chat Superpowers

WebSockets turn your web app into a live phone conversation. Instead of sending messages back and forth, you open a connection that stays live for instant back-and-forth chat.

Here’s a simple echo server that repeats whatever you say:

from fastapi import FastAPI, WebSocket

app = FastAPI()

@app.websocket("/chat")

async def chat_endpoint(websocket: WebSocket):

await websocket.accept() # Pick up the call!

while True:

message = await websocket.receive_text() # Listen

await websocket.send_text(f"You said: {message}") # Reply

The browser side is just as simple:

id="messageInput" placeholder="Say something..."/>

onclick="sendMessage()">Send

id="chatLog">