Committing to continuous discovery means changing the way your product team operates. It’s no longer about making decisions purely based on your intuitions or stakeholder requests, but finding ways to integrate touch points with customers into your work every week—if not every day.

This can sound overwhelming. Your schedule may already be double-booked and you might already feel like there’s no room to add discovery to your workload.

We hope that today’s Product in Practice will change your mind.

Ellen Juhlin, a product coach and Product Talk instructor, shares several ways that she used in-app surveys to collect feedback and create regular touch points with customers.

In each instance, Ellen found a solution that didn’t require too much time or technical know-how to set up. While her results were far from perfect, they’re a great reminder that continuous discovery is a journey. The best way to begin is with small steps.

Do you have a Product in Practice story you’d like to share? You can submit yours here.

Quick Re-Introduction to Ellen Juhlin

If you’re a longtime Product Talk reader, you’ve taken a Product Talk Academy course, or you’ve spent some time in the Continuous Discovery Habits community, you probably already know Ellen Juhlin.

But just in case you haven’t met, Ellen is a Product Talk Academy instructor and regular contributor to the CDH community. She’s been featured in several posts on the Product Talk blog, including:

- Product Talk Is Growing: Meet New Instructor Ellen Juhlin

- Tools of the Trade: Finding People to Interview Before You Have Customers

- Leading the Change: How Ellen Juhlin Introduced Discovery Habits at Orion Labs

- Product in Practice: Getting Engineers Involved in Brainstorming

For today’s post, we’ll be looking at four different ways that Ellen has used in-app surveys to guide her discovery process. This topic was inspired by a question that came up in the CDH community, where a member was looking for specific tools people had used to run in-app surveys.

Here’s an overview of each use case we’ll be covering:

- Using a Mixpanel one-question survey to understand user types

- Adding a question to new user onboarding to determine use cases

- Recruiting users to interview with OneSignal, Typeform, and Calendly

- Prompting users to review quality with Typeform

Using a Mixpanel One-Question Survey to Understand User Types

A few years ago, Ellen was the Senior Director of Product Management at Orion Labs, a company that offers walkie-talkie-style voice communication on a mobile phone or via the web.

While Orion had started with a B2C focus, they got a lot of inbound interest in the B2B space. They were hoping to get a general sense of whether they had more consumer or business users.

In order to gather more data, one of Ellen’s coworkers set up a quick survey question pop-up in the mobile app. This survey would ask people whether they were using Orion for work or personal use.

At the time, Orion was already using Mixpanel (a product analytics tool) for collecting data and analytics. One of Mixpanel’s additional features was a pop-up that you could use to show an offer or link. The Orion team had previously used the pop-up to showcase a new feature that was available in the app or prompt people to update the version of the app they were using. (Ellen notes that this feature is no longer available in Mixpanel, but it was at the time.)

Mixpanel allowed product teams to create pop-up notifications that prompted app users to update the version of the app they were using or asked them a simple question.

The way the pop-up worked in Mixpanel was that you could define a set of user parameters to show the pop-up to. “For instance, when we used it to showcase a new app feature, we only showed it to users running a certain version of the app. We could also show one pop-up to iOS users with an iOS screenshot, and a different one to Android users with an Android screenshot,” says Ellen.

However, Ellen says they were pretty constrained in terms of what they could do with the pop-up. “I remember there being limited options at the time for when we could show the pop-up, and so it had to be shown at the time the user launched the app. You had to keep an eye on which pop-up messages were enabled, otherwise users would get several in a row.”

Similarly, when it came to designing the pop-up, the capabilities were quite limited. There was a “what you see is what you get” (WYSIWYG) interface for designing the pop-up message, and you could add title text, an image, and up to two buttons with text labels. “Sometimes we would just create an image with the layout and text the way we wanted it, instead of using their text,” says Ellen.

With both the constraints of the design and this particular use case, Ellen says their results were not particularly helpful. She explains, “For this particular situation of trying to understand consumer vs. business, because the pop-up message was text-only in a different font, it looked like an ad. Most users just dismissed it entirely without answering, or would just pick something at random to make it go away. We had to do a lot of filtering to get the data, and then didn’t end up with enough real answers to draw any meaningful conclusions.”

But Ellen didn’t give up. She kept looking for ways she could collect quick feedback from customers as she made her daily product decisions.

Adding a Question to New User Onboarding to Determine Use Cases

More recently, Ellen was working as a product advisor with LiveTranscribe, an app that provided transcription of live conversations. At the time, they were trying to understand the percentage of users who were deaf or hard of hearing (using the app to better understand live conversations), vs. users who were not hard of hearing and were using the app to take dictation. They wanted this data to decide whether to focus more on creating solutions specifically for the dictation users.

They added a question into the new user onboarding process, so the user would pick one of two answers to the question “How do you want to use this app?” before they could start using the app. The answer was then stored alongside other metrics data for each user profile.

While all users answered this question during onboarding, Ellen later learned that their answers were not necessarily accurate.

At a later stage, when Ellen wanted to interview dictation users to learn more about their use case, she used OneSignal (a customer messaging tool that enables in-app messaging and push notifications) to target a pop-up message only to users who had indicated they wanted to use the app for dictation.

The pop-up asked if they’d be willing to participate in a paid interview and prompted them to fill out a short recruiting survey if they were interested. But when the survey asked if they used the app for understanding conversation or for dictation, the product team discovered that most of the users who filled out the survey were using the app for conversations—even though they had previously indicated they used it for dictation!

What happened here? Ellen says, “My theory is that asking users to self-describe their use case is not a reliable data point, especially when asked out of context.” She thinks asking how someone will use the app is tricky for them to answer because it’s a speculative question so early in their journey. They might not know themselves, and during onboarding they haven’t gotten any value from the app yet, so they’re not motivated to answer accurately.

In retrospect, Ellen says she’d try different approaches, such as asking the users each time they want to use the transcription function whether it was for self-dictation or for understanding others. She’d also look at the usage of dictation-specific features, like the frequency of exporting the transcription.
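As a rough illustration of the behavior-based approach Ellen describes, here is a minimal Python sketch that infers a likely use case from how often a user exports a transcription. The session format, the function names, and the 50% threshold are all assumptions made for this example. The article doesn't say how the team would have measured this.

```python
def export_rate(sessions):
    """Share of transcription sessions that ended with an export."""
    if not sessions:
        return 0.0
    exported = sum(1 for s in sessions if s["exported"])
    return exported / len(sessions)


def likely_use_case(sessions, threshold=0.5):
    """Guess the use case from behavior instead of a self-reported answer.

    The threshold is a hypothetical starting point, not a validated cutoff.
    """
    if not sessions:
        return "unknown"
    if export_rate(sessions) >= threshold:
        return "dictation"
    return "conversation"


# Example: a user who exports most of their transcripts.
frequent_exporter = [{"exported": True}, {"exported": True}, {"exported": False}]
print(likely_use_case(frequent_exporter))
```

A guess like this is only a signal to check against interviews, not a replacement for them.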

Setting up a One-Question Survey with OneSignal, Typeform, and Calendly to Recruit Users to Interview

A key part of discovery is conducting interviews with your users. When Ellen was a product manager with Sound Amplifier (an early-stage mobile app designed to help people with mild hearing loss hear conversations and movies more easily using headphones they already have), the team had an MVP version of the app and she wanted to learn more from its users. Who were the people who sought out this kind of app and what did they use it for? What other features might the team build to make the app more useful? She figured the best way to answer these questions was by talking directly with users.

The company already had OneSignal, which allowed them to trigger a pop-up based on certain behaviors. Ellen could decide what might be a meaningful moment, such as the third time someone opened the app or the fifth time they pressed a particular button.
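In Ellen's case, OneSignal handled the triggering, so no custom code was needed. Still, the underlying rule is simple enough to sketch. The Python below is a minimal illustration, not OneSignal's API: the event names, the thresholds, and the `PromptTrigger` class are hypothetical.

```python
from collections import Counter

# Hypothetical rules: event name -> the occurrence on which to show the prompt.
TRIGGER_RULES = {"app_open": 3, "amplify_pressed": 5}


class PromptTrigger:
    """Counts events per user and fires once when a rule's threshold is hit."""

    def __init__(self, rules):
        self.rules = rules
        self.counts = Counter()  # (user_id, event) -> times seen
        self.prompted = set()    # users who have already seen the prompt

    def record(self, user_id, event):
        """Record one event; return True if the prompt should be shown now."""
        self.counts[(user_id, event)] += 1
        if user_id in self.prompted:
            return False  # never show the prompt to the same user twice
        if self.counts[(user_id, event)] == self.rules.get(event):
            self.prompted.add(user_id)
            return True
        return False


trigger = PromptTrigger(TRIGGER_RULES)
for _ in range(3):
    show = trigger.record("user-1", "app_open")
print(show)  # True on the third app open
```

Tracking who has already been prompted also avoids the problem Ellen ran into with Mixpanel, where users could get several pop-ups in a row.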

OneSignal allowed her to design a pop-up that linked to a Typeform survey where she could get more demographic information and prioritize who she wanted to talk to. She’d respond to everyone who filled out the Typeform survey with a message that said, “Thanks for responding, we’ll let you know if you’ve been selected to participate.”

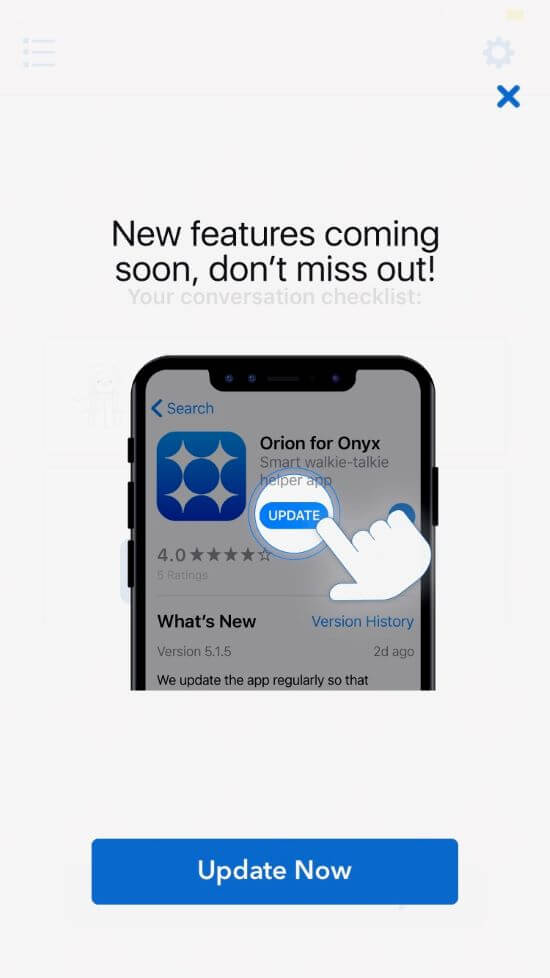

For the pop-up itself, you have the ability to choose to add an image and select from a few colors and fonts, but it’s still quite limited. “You have very little control over it fitting into the style of the rest of your app,” says Ellen. This means if aesthetics are important to you, it won’t look as polished as everything else in your app.

On the plus side, OneSignal can connect to Amplitude, which allows you to set up different cohorts of users.

Customer messaging tool OneSignal can create pop-ups like this one that prompt users to participate in an interview.

Because they were sending people to Typeform, they could also set up hidden variables that passed through from OneSignal to Typeform. This meant that they could attach information like the user ID to the Typeform page and review it as part of the responses users provided. With the user ID, they could look the person up in Amplitude and see how active a user they were.
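To make the hidden-variable idea concrete, here is a minimal Python sketch of how a survey link might carry a user ID. It assumes hidden fields are appended to the form URL as a fragment (after `#`). Depending on how your form is set up, they may need to go in the query string instead, so check Typeform's documentation. The form URL, the field names, and the `build_survey_url` helper are placeholders invented for this example.

```python
from urllib.parse import urlencode


def build_survey_url(form_url, hidden_fields):
    """Append hidden fields to a survey link as a URL fragment.

    Assumption: the form reads hidden fields from the part after '#'.
    """
    clean = {key: str(value) for key, value in hidden_fields.items() if value is not None}
    if not clean:
        return form_url
    return f"{form_url}#{urlencode(clean)}"


# Placeholder form URL and field names, for illustration only.
url = build_survey_url(
    "https://example.typeform.com/to/FORM_ID",
    {"user_id": "u_123", "source": "in_app_popup"},
)
print(url)
# https://example.typeform.com/to/FORM_ID#user_id=u_123&source=in_app_popup
```

Each hidden field also has to be declared in the form itself, which is the kind of coordination with a developer that Ellen mentions.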

They could also see if it was someone who’d been active multiple times over the past month or used the app a certain number of times so they could define a cohort. This was helpful because they didn’t want a first-time user or someone who had only used the app once in the past year. Their goal was to target and speak with people who had been using the app regularly.
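Ellen's team defined this cohort in Amplitude, but the rule behind it can be sketched in a few lines of Python. The 30-day window, the three-active-days minimum, and the `is_regular_user` name are assumptions for illustration. The article doesn't give the team's actual thresholds.

```python
from datetime import date, timedelta


def is_regular_user(session_dates, today, window_days=30, min_active_days=3):
    """True if the user was active on enough distinct days within the window.

    Both thresholds are hypothetical defaults; tune them to your product.
    """
    cutoff = today - timedelta(days=window_days)
    recent_days = {d for d in session_dates if cutoff < d <= today}
    return len(recent_days) >= min_active_days


today = date(2024, 5, 15)
regular = [date(2024, 5, 1), date(2024, 5, 8), date(2024, 5, 14)]
lapsed = [date(2023, 6, 1)]

print(is_regular_user(regular, today))  # True
print(is_regular_user(lapsed, today))   # False
```

Counting distinct days rather than raw sessions keeps one busy afternoon from looking like regular use.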

When setting up the Typeform survey, Ellen says it was important to write the questions in a way that would filter the type of user they wanted without revealing what they were looking for. (This is a topic Ellen covered in more detail in a previous Tools of the Trade on interviewing people before your product has customers.)

Once she felt confident in the logic of her survey, Ellen directed people to a Calendly link so they could book a time to talk. Ellen says, “I think this approach is more effective when you’re recruiting in-app as opposed to ‘We’ll let you know’ and then reaching out with a calendar link. That’s another kind of cycle vs. ‘Yes we really want you! Come get your gift card. Click this link.’”

Ellen also mentions that when you link from Typeform to Calendly, it’s possible to set it up so Calendly can prefill the user’s name, email address, and user ID.
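Here is a minimal Python sketch of what that prefilled link might look like. It assumes Calendly accepts `name` and `email` as URL parameters and `a1` as the answer to the first custom question on the booking page. Confirm the parameter names against Calendly's documentation before relying on them. The scheduling URL and the `build_booking_url` helper are placeholders for this example.

```python
from urllib.parse import urlencode


def build_booking_url(scheduling_url, name, email, user_id):
    """Build a scheduling link with the invitee's details prefilled.

    Assumption: 'a1' maps to the first custom question on the booking
    page, used here to carry the user ID.
    """
    params = {"name": name, "email": email, "a1": user_id}
    return f"{scheduling_url}?{urlencode(params)}"


# Placeholder scheduling link, for illustration only.
url = build_booking_url(
    "https://calendly.com/your-team/interview",
    name="Ada Lovelace",
    email="ada@example.com",
    user_id="u_123",
)
print(url)
# https://calendly.com/your-team/interview?name=Ada+Lovelace&email=ada%40example.com&a1=u_123
```

Carrying the user ID through to the booking makes it easier to look up the person's usage before the interview.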

It’s worth spending some time thinking about what information you’d like to collect at each step. Ellen says, “It’s kind of a balancing act of asking for the right information at the right time. If the first question of the survey is, ‘What’s your email address?’, they might just stop then because they don’t know what they’re getting out of it. But if you ask them a couple questions and then you decide yes, you want to interview them and say, ‘Please enter your email to continue,’ then it’s clear that the purpose is to get something.”

While this system wasn’t perfect, it worked pretty well for Ellen’s needs. She says the downside of this approach was that it did take a bit of coordination since she was working across several tools. She had to set up the survey and add logic to make sure the right people were going to the Calendly link and coordinate with the developer who was filling in some of the hidden variables behind the scenes. “Any time you go from one tool to another, you need to make sure it’s getting the right information and importing the cohorts from Amplitude, so there’s a lot of set-up,” says Ellen.

One upside, according to Ellen, was the flexibility to target different user behaviors and cohorts. While it did take some work to get exactly what she wanted, the process took days or weeks rather than months.

Ultimately, Ellen considered this process a success. She was able to get multiple interviews scheduled and learn more about specific use cases from these interviews.

Using Typeform to Prompt Users to Rate a New Product

Another product Ellen was working on, Ramble, was a web app that would collect a voice recording of someone speaking and then use AI to summarize the main points of what they’d said. Ellen had some ideas of use cases—like generating a to-do list or paraphrasing a lengthy entry—but she also had a hunch that there might be other use cases she hadn’t identified yet.

Because this was a new product and there was a lot of dropoff among beta testers, Ellen wanted to understand the root of the problem: Was the app just not integrated into people’s routines? Was the output not good enough? Or was there something else that was preventing people from continuing to use it? Essentially, Ellen wanted to test the risky assumption that the output was good and the app was useful to people.

A few developers on the team had experience with different systems, so they considered several ways to measure quality, such as the way Google Meet prompts you to rate the quality of a call when it ends. “It seemed like it must be easy because a lot of people are doing it in the product world,” says Ellen.

But as she began looking into it, Ellen learned that a lot of the packaged products you can plug into your app are pretty pricey for a small startup, especially at scale. Rather than make a monetary investment, she wanted to consider if there was something they could do with the tools they already used.

At the same time, Ellen says they realized they didn’t want to build something custom because it quickly got complicated: they would need a way to easily store and review the answers, which could mean building a whole database of survey responses.

At this stage, Ellen says they started looking at the tools they already used. Was there something that might work for this purpose as well?

Because they already used Typeform, they started looking at how they could make it show up in the product in the right way and how they could trigger it at the right time. “It doesn’t make sense to ask for feedback right when they log in because they haven’t done anything, so we wanted to make sure we asked at the right time and made it so it wasn’t blocking their tasks—an optional, ‘Hey, we’d like to know this,’ but it’s not getting in the way of their other tasks, too,” explains Ellen.

By looking at the various software development kit (SDK) options that Typeform had, Ellen discovered that she could add a little tab on the side of the page that can animate in so it draws a little bit of attention without blocking other things.

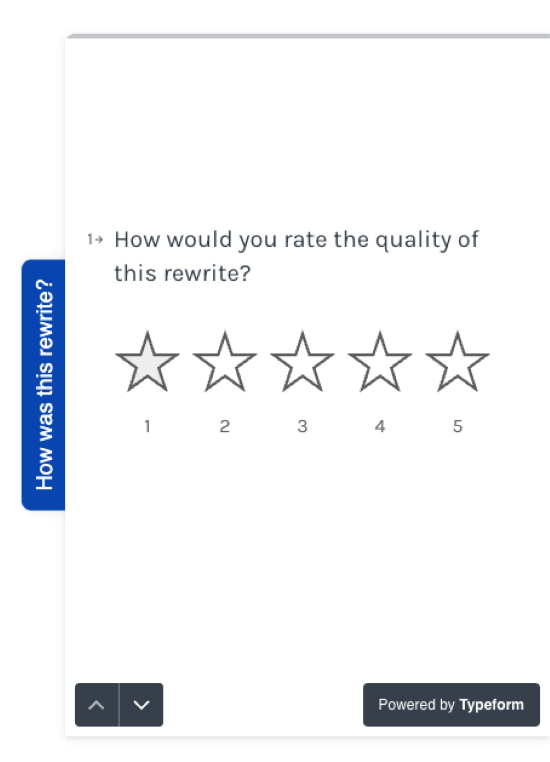

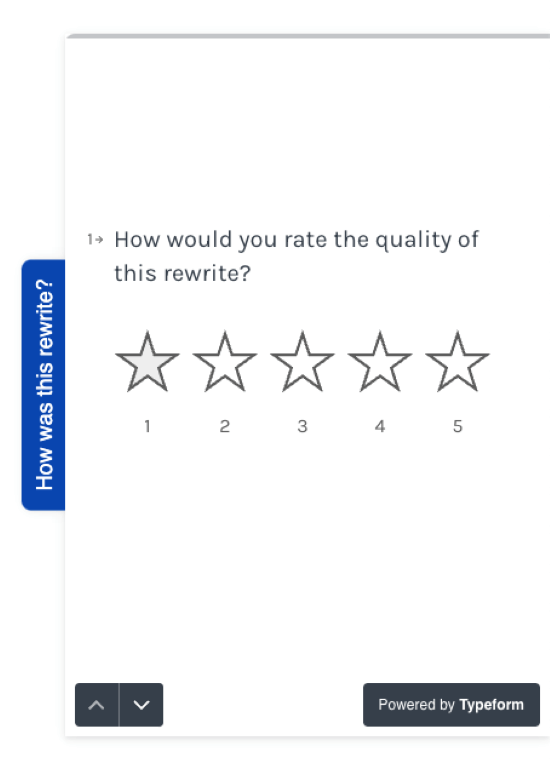

With Typeform, you can set up a pop-up to appear on the side of the page so it doesn’t interrupt a user’s flow when they’re in the middle of a task.

She could add a question like, “How was this rewrite?” and include a row of stars under it so the user could click on the question and rate 1 to 5 stars. This was an easy, low-barrier way to get input.

If someone clicked on the side tab shown above, it would prompt them to provide a star rating for the quality of the output.

If the person clicked on the stars to add their rating, the form could follow up by asking, “Why did you give that rating?”

If the person was logged in to the app, the product team had access to their email address and could follow up if they had additional questions. Ellen says it was important not to look at the output users had created without getting their explicit consent. “That’s their private information and building trust was important. We didn’t want to read it, but we did want to know what they thought of it.”

They didn’t have to ask what settings a user had chosen for their output because they could collect that from the web app. That saved the user a step of having to input that and potentially get it wrong. “In hindsight, it might have been interesting to collect what they thought they were getting and compare against what the product had actually provided,” muses Ellen.

While the product team didn’t run the rating request for too long, Ellen says it was still beneficial. “It was helpful as both our internal developers and external beta testers could respond as well. Even for internal testers it was a helpful way to get feedback and see where we needed to upgrade things.”

Overall, Ellen says Typeform makes it really easy to set something like this up. “It’ll provide you with the code that you give to your developer. You can also do a Slack integration, so as soon as someone fills out a form, you get a notification in Slack so you can go look at it and respond to it immediately or reach out to the person.”

This also ensured product team members stayed up to date on the feedback users were providing. “Instead of needing to remind people to go look at the Typeform responses, you get those notifications automatically.”

The advantage of using Typeform in this case was that they already had a subscription to it and Ellen was already quite familiar with it. “It’s pretty easy to use and being able to see things as they come in and not spend a lot on a custom solution or an expensive third-party package—this was a fast solution.”

Ellen also believes it was a good experience for users. “The interface when people are filling out the form is pretty clean, clear, and it doesn’t look like an ad.”

The main disadvantage was that they didn’t have access to partial form fills. If someone clicked the number of stars but didn’t fill out the rest of the form or just closed it, they’d lose that information. Ellen notes that it might be possible to work around this, but she didn’t have the opportunity to investigate it in more depth.

Overall, Ellen was satisfied with the Typeform experience: “I was pleasantly surprised that I was able to do this just with the Typeform plugin instead of coding something in, which would have required going back to the developer any time I wanted to change the wording or something else. With Typeform, if I realized the question wasn’t clear or I wanted to add another response option, then I could just go in and spend five minutes doing that.”

Key Learnings and Takeaways

Looking back on these experiences with driving discovery through different in-app surveys, Ellen has a few key learnings and takeaways to share.

Weigh the benefits and drawbacks of different approaches. You can build a custom tool (which takes a lot of time and money), purchase a specific tool (which is quick but potentially expensive), or use an existing tool (it might take some time to get it set up but doesn’t require additional budget). Here’s Ellen’s take on each approach.

- Building a custom tool: “Building a custom system to ask one question and store the results in your user data has the benefit of looking more integrated with the rest of the product, but it doesn’t scale well. If you want to change the question, your developer has to change where the data goes, change the UI, roll out a new version, etc.”

- Purchasing a specific tool: “There are a lot of cheaper tools for adding in a pop-up at a particular time with a yes or no question, but if you want to ask a nuanced question (rate the quality) with follow-up questions (why did you give that rating?), you’re looking at a more expensive tool, or a less-integrated feel. And remember that being able to store and review the results easily is a harder problem than showing the user a pop-up message.”

- Using an existing tool: “You will be able to iterate faster with a tool that makes it easy to change the content and even targeting without requiring additional developer time. We didn’t want to spend a week or two waiting on a developer to build a custom survey tool when we had hired them to build our product. But a ticket saying ‘I need to trigger this tool based on this specific user behavior’ is easier than ‘Build a new survey tool that stores results where I can easily review them.’”

Make sure you’re keeping the user’s experience top of mind. Don’t ask for their email if they haven’t gotten any value out of your product yet. Similarly, don’t disrupt them before they’ve had a chance to try out the product.

Avoid speculative questions. “Just like in interviews, if you ask a speculative question, you will get an inaccurate answer that doesn’t reflect reality (if you get an answer at all),” says Ellen. She recommends only asking questions during onboarding that will critically affect the user’s experience. For example, in Sound Amplifier, they asked the user whether they were using wired or Bluetooth headphones so they could guide them through the appropriate setup process.

Be mindful of people’s motivation for participating. When recruiting users to participate in interviews (especially when you’re offering a financial incentive), make sure you don’t give away exactly what you’re looking for. You don’t want to unintentionally encourage people to take the survey many times until they’re a match.

Still looking for more help creating in-product surveys? Come join us in the next cohort of Assumption Testing, where you’ll learn how to run effective in-product surveys to test your assumptions in a supportive environment with like-minded peers.

The post Product in Practice: Getting Value Out of In-App Surveys Takes Iteration appeared first on Product Talk.

Product in Practice: Getting Value Out of In-App Surveys Takes Iteration was first posted on May 15, 2024 at 6:00 am.
