[ML Story] Exploring Generative AI using MakerSuite and PaLM API

Your go-to solutions to prototype and dive into Large Language Models by Google

Introduction

The way we approach problem-solving as humans has undergone a significant transformation with the widespread adoption of Large Language Models (LLMs). In the past, tackling tasks such as summarizing a document or classifying a sentence with a computer required writing a complex program, essentially a set of meticulously crafted commands in a specific programming language. With LLMs, addressing these challenges can now be as simple as writing a textual prompt.

Today, describing the desired appearance of a website can yield not only a complete set of files but, in some cases, an already deployed application. This is made possible by combining LLMs with automation and specialized tools that streamline the development and deployment of websites.

To get the best results from LLMs, however, it is important to explore different techniques. In this article, I present a beginner's strategy for Prompt Engineering; more advanced techniques will follow in later articles of this series. To do this, I will use MakerSuite and the PaLM API. Assuming you are already familiar with Large Language Models, I will skip an introduction to the subject and instead introduce the tools used in this tutorial.

MakerSuite and PaLM API

Like GPT models, PaLM is a transformer-based large language model, offered by Google as an API service. Understanding the offerings of multiple LLM providers (OpenAI, Google, Anthropic, and others) can help you make the right design decisions for your application, such as choosing a model and identifying its limitations.

At the time of writing, you can access the PaLM API in three ways: MakerSuite, the Vertex AI libraries, and the Vertex AI APIs. The last two suit those who are already familiar with Google Cloud Platform (GCP) and its ML infrastructure, and they also give you access to all of Google's available models.

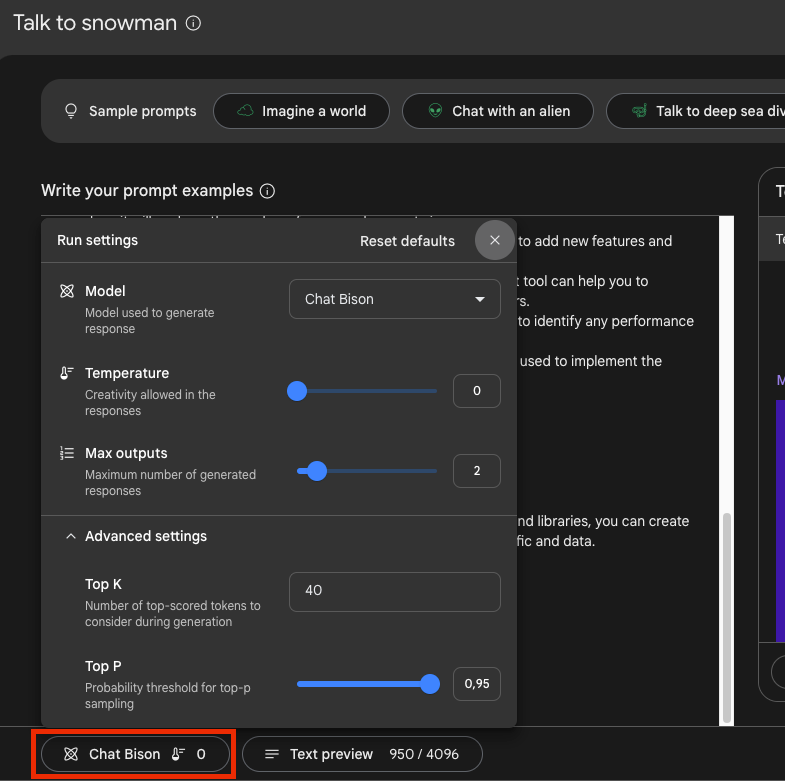

In this article, we will focus on MakerSuite to access our models. MakerSuite stands out for its friendly interface (similar to those of other common LLM API providers) and its easy setup, which requires only an API key. You can prototype your application with a set of prompts, datasets, and custom-tuned models. The interface makes it easy to experiment with the model's standard settings, such as the model type, temperature, and maximum number of output tokens. Finally, you can view the generated code and embed it in your own apps.
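To build intuition for what sampling settings such as temperature, top-k, and top-p typically do, here is a generic sketch of temperature scaling followed by top-k and nucleus (top-p) filtering over a toy next-token distribution. It illustrates the common technique only; it is not how PaLM implements decoding, and the function names and toy scores are made up for this example.

```python
import math
import random


def filter_distribution(logits, temperature=0.9, top_k=40, top_p=0.95):
    """Return the renormalized token distribution left after temperature,
    top-k and top-p (nucleus) filtering. `logits` maps token -> raw score."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    # Temperature scaling: values below 1 sharpen the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    # Softmax, subtracting the max score for numerical stability.
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    probs = sorted(((tok, e / total) for tok, e in exps.items()),
                   key=lambda pair: pair[1], reverse=True)
    # Top-k: keep only the k most likely tokens.
    probs = probs[:top_k]
    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in probs:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # Renormalize the surviving tokens so their probabilities sum to 1.
    mass = sum(p for _, p in kept)
    return {tok: p / mass for tok, p in kept}


def sample_token(logits, rng, **settings):
    """Draw one token from the filtered distribution."""
    dist = filter_distribution(logits, **settings)
    tokens, weights = zip(*dist.items())
    return rng.choices(tokens, weights=weights, k=1)[0]


toy_logits = {"hero": 2.0, "girl": 1.5, "gum": 0.5, "store": -1.0}
print(filter_distribution(toy_logits, temperature=0.9, top_k=1, top_p=0.95))  # {'hero': 1.0}
print(sample_token(toy_logits, random.Random(0), temperature=0.9, top_k=2, top_p=0.95))
```

Lowering any of the three settings tends to make the output more predictable; raising them leaves more room for variation.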

Google has made it easy to set up access to the PaLM API on MakerSuite. You don't need to set up a Google Cloud Platform environment or configure service account roles, as you would with the Vertex AI libraries and APIs. Users can access a model directly, outside the usual Google Cloud console.

At the time of this article, MakerSuite is not yet available in Brazil, requiring access through a VPN. However, in most countries, it is already accessible, so it should become available worldwide soon.

When you first access MakerSuite, an important notice appears. It is especially relevant if you intend to deploy these models in production without checks on their responses, such as guardrails:

- The APIs are currently in limited general availability. You may use them for production use but Google may enforce rate limits during this period.
- Don’t submit sensitive (e.g., confidential) or personal information.
- PaLM API is experimental technology and may sometimes provide inaccurate or inappropriate information that doesn’t represent Google’s views.
- Don’t rely on responses as medical, legal, financial, or other professional advice.

Next, obtain an API key (available from the menu once you accept the terms above). Keep this key confidential: do not share it with anyone and store it securely, since you won't be able to retrieve it later and would have to create a new one.
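One way to keep the key out of your source code (and out of shared notebooks) is to read it from an environment variable at runtime. The sketch below assumes a variable named `PALM_API_KEY`; that name and the `load_api_key` helper are my own choices, not something MakerSuite requires.

```python
import os


def load_api_key(env_var="PALM_API_KEY"):
    """Read the API key from an environment variable instead of hard-coding it.
    The variable name is a hypothetical choice for this example."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running this script."
        )
    return key


# Usage, assuming the google-generativeai package is installed:
# import google.generativeai as palm
# palm.configure(api_key=load_api_key())
```

If the key ever leaks, revoke it in MakerSuite and create a new one; only the environment variable needs to change.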

In the Prompt Gallery, you can discover various approaches for your applications, which Google groups into three categories: ‘Text,’ ‘Data,’ and ‘Chat.’ Depending on the scenario, MakerSuite assigns a model: text-bison-001 for tasks such as summarization and question answering, or chat-bison-001 for interactive, multi-turn applications such as chatbots and conversations.

Text use-cases

Bison is one of the largest models developed by Google. While it is not the largest (in ascending order of size, the sequence is Gecko, Otter, Bison, and Unicorn), it still delivers strong results.

To create a text model example, I navigate to the Prompt Gallery and choose the ‘Style rewrite’ example, which is useful for rewriting text in a specific style.

When you open the example in MakerSuite, you'll find the interface preconfigured for this use case. The most interesting part is how easily you can attach it to your app with the “Get Code” functionality. This button opens a pop-up with code similar to the example below:

```python
# Install the SDK first; in Colab or Jupyter run: !pip install google-generativeai
import google.generativeai as palm

palm.configure(api_key="YOUR API KEY")

# Default generation settings exported by MakerSuite for this prompt
defaults = {
    'model': 'models/text-bison-001',
    'temperature': 0.9,
    'candidate_count': 1,
    'top_k': 40,
    'top_p': 0.95,
    'max_output_tokens': 1024,
    'stop_sequences': [],
    # Numeric block threshold for each harm category
    'safety_settings': [
        {"category": "HARM_CATEGORY_DEROGATORY", "threshold": 1},
        {"category": "HARM_CATEGORY_TOXICITY", "threshold": 1},
        {"category": "HARM_CATEGORY_VIOLENCE", "threshold": 2},
        {"category": "HARM_CATEGORY_SEXUAL", "threshold": 2},
        {"category": "HARM_CATEGORY_MEDICAL", "threshold": 2},
        {"category": "HARM_CATEGORY_DANGEROUS", "threshold": 2},
    ],
}

prompt = """The girl walked to the store, her pockets full of change. She hopped and skipped and danced her way downtown, change rustling like a tambourine. She had been saving up for weeks, and today she was extremely excited, as it was the day she was finally going to buy a pack of her favorite bubble gum. She couldn't wait to blow the biggest bubbles in the world.

Rewrite this story as a superhero origin story.
"""

response = palm.generate_text(
    **defaults,
    prompt=prompt
)

# Print the text of the first generated candidate
print(response.result)
```

I used the Python code snippet, but it’s also available in other languages.
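The generated snippet hard-codes both the story and the instruction in a single prompt string. If you want to reuse the same prompt for other texts or styles, one option is a small template function like the sketch below; `STYLE_REWRITE_TEMPLATE` and `build_style_rewrite_prompt` are hypothetical names, not part of the SDK.

```python
STYLE_REWRITE_TEMPLATE = """{story}

Rewrite this story as {style}.
"""


def build_style_rewrite_prompt(story: str, style: str) -> str:
    """Fill the (hypothetical) style-rewrite template with a story and a target style."""
    return STYLE_REWRITE_TEMPLATE.format(story=story.strip(), style=style.strip())


story = "The girl walked to the store, her pockets full of change."
prompt = build_style_rewrite_prompt(story, "a superhero origin story")
print(prompt)

# Then, with the SDK configured as in the snippet above:
# response = palm.generate_text(**defaults, prompt=prompt)
```

Keeping the instruction in one template makes it easier to compare how the model handles different styles while the generation settings stay fixed.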

The code snippet above already reveals some relevant details:

- It targets the text model, models/text-bison-001.

- Besides the usual settings found in other LLM APIs (such as temperature, top_k, and top_p), there is safety_settings, which I want to highlight. This parameter helps align your application with Responsible AI principles by mitigating potential harm, toxicity, and violence. For each content category, you can set how strict the filter is, for example blocking content with a medium or high probability of being unsafe.
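MakerSuite exports the safety thresholds as bare integers, which are hard to read. The sketch below translates them into names, assuming the integers follow the PaLM API's `HarmBlockThreshold` enum (0 = unspecified, 1 = block low and above, 2 = block medium and above, 3 = block only high, 4 = block none); please verify this mapping against the API reference before relying on it. `describe_safety_settings` is a hypothetical helper, not part of the SDK.

```python
# Assumed mapping of numeric thresholds to PaLM API HarmBlockThreshold names.
THRESHOLD_NAMES = {
    0: "HARM_BLOCK_THRESHOLD_UNSPECIFIED",
    1: "BLOCK_LOW_AND_ABOVE",
    2: "BLOCK_MEDIUM_AND_ABOVE",
    3: "BLOCK_ONLY_HIGH",
    4: "BLOCK_NONE",
}


def describe_safety_settings(settings):
    """Map each harm category to a readable threshold name (hypothetical helper)."""
    described = {}
    for entry in settings:
        category = entry["category"].removeprefix("HARM_CATEGORY_")
        threshold = entry["threshold"]
        described[category] = THRESHOLD_NAMES.get(threshold, f"UNKNOWN({threshold})")
    return described


safety_settings = [
    {"category": "HARM_CATEGORY_DEROGATORY", "threshold": 1},
    {"category": "HARM_CATEGORY_VIOLENCE", "threshold": 2},
]
for category, rule in describe_safety_settings(safety_settings).items():
    print(f"{category}: {rule}")
```

Under this reading, the exported defaults filter derogatory and toxic content more aggressively (from low probability upward) than the other categories.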

In the pop-up, you should also see an “Open in Colab” button, which lets you explore the example in the cloud using the notebook environment you are already familiar with.

Chat use-cases

If you’re planning to develop a chatbot or a conversational application, your go-to model should be a Chat one, specifically chat-bison-001.

Returning to the Prompt Gallery, navigate to the ‘Talk to snowman’ example. In our case, however, we’ll adjust it to fit a professional scenario.

Open the example in MakerSuite, click “Get Code” again, and choose “Open in Colab”. In Google Colab, click “Connect” on the right side of the screen, then edit the configuration in the second cell to include your API key. You are now ready to run all the cells.

You should see output similar to the example below:

Yes, I melt. I’m made of snow, and snow melts when it gets warm. I’m not sure how long I’ll last, but I’m enjoying my time here while I can!

It’s interesting, but unless your company works in a field related to snowmen or it’s the Christmas season, you probably won’t use an example exactly like this in your applications. To build something closer to real-world needs, I’ll return to MakerSuite and show how to do it in the UI (you can also do it in Colab, but for this tutorial I want to showcase the interface).

First, keep only one example of interaction between the user and the model, removing all others.

In “Context”, replace the current text with the following one:

Act as a Senior Software Engineer. You are an expert in designing distributed systems and take into account scalability and design patterns in your solutions. Answer the questions with short responses.

Add the following in the “User Input”:

How can I create a scalable Python architecture?

For the “Model Input”, paste the following (generated using Bard):

To create a scalable Python architecture, you need to consider the following factors:

Use concurrency and parallelism. Python has a number of features that can be used to implement concurrency and parallelism, such as threads, processes, and asynchronous programming. By using concurrency and parallelism, you can distribute your workload across multiple cores and processors, which can improve the performance and scalability of your application.

Use a message queue. A message queue is a distributed messaging system that can be used to decouple different components of your application. This can make your application more scalable and resilient to failures.

Use a distributed database. A distributed database is a database that is spread across multiple servers. This can improve the performance and scalability of your application by distributing the load across multiple machines.

Use a caching layer. A caching layer can be used to store frequently accessed data in memory. This can improve the performance of your application by reducing the number of database queries that need to be made.

Use a load balancer. A load balancer can be used to distribute traffic across multiple instances of your application. This can improve the performance and scalability of your application by handling increased traffic.

Here are some additional tips for creating a scalable Python architecture:

Design your application with scalability in mind. When designing your application, think about how it will scale as the number of users and requests increases.

Use a modular architecture. A modular architecture makes it easier to add new features and scale your application.

Use a configuration management tool. A configuration management tool can help you to manage the configuration of your application across multiple servers.

Monitor your application. It is important to monitor your application to identify any performance bottlenecks or scalability issues.

There are a number of Python frameworks and libraries that can be used to implement the above principles. Some popular options include:

Celery for asynchronous programming

RabbitMQ for message queuing

PostgreSQL and MySQL for distributed databases

Redis for caching

Gunicorn and Nginx for load balancing

By following the above tips and using the appropriate frameworks and libraries, you can create scalable Python architectures that can handle large volumes of traffic and data.

Now, in the “Test your prompt” window, type “And how would I do in Java?”, exactly as shown, and we are ready! You can observe how your model performs with this provided example.
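If you prefer to reproduce this setup in code, for example in the Colab notebook from “Get Code”, the sketch below gathers the context, the single example exchange, and the test message into keyword arguments. It assumes the `google-generativeai` package's `palm.chat` function, which accepts `model`, `context`, `examples`, and `messages`; `build_chat_request` is a hypothetical helper, and the context and example answer are shortened here.

```python
def build_chat_request(context, examples, message, model="models/chat-bison-001"):
    """Collect chat settings into keyword arguments (hypothetical helper)."""
    return {
        "model": model,
        "context": context,
        # Each example is an (input, output) pair showing the desired behavior.
        "examples": list(examples),
        "messages": [message],
    }


request = build_chat_request(
    context=(
        "Act as a Senior Software Engineer. You are an expert in designing "
        "distributed systems. Answer the questions with short responses."
    ),
    examples=[(
        "How can I create a scalable Python architecture?",
        "To create a scalable Python architecture, consider concurrency, "
        "message queues, distributed databases, caching and load balancing.",
    )],
    message="And how would I do in Java?",
)

# With the SDK installed and configured (assumption):
# import google.generativeai as palm
# response = palm.chat(**request)
# print(response.last)                          # the model's latest reply
# response = response.reply("Any other tips?")  # continue the conversation
```

Keeping a single, short example lets the context carry most of the behavior while still showing the model the expected answer format.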

You can also modify other configurations by clicking on the model’s name in the top left corner of the screen.

Closing remarks

Thank you so much for reading this article. It’s the first in a series that I’m planning to write related to Prompt Engineering and how to address various challenges we may encounter with Large Language Models. Expect more advanced articles in the future.

Due to the rapid advancements in this field, this tutorial may become outdated quickly. I’ll review it periodically to see if any adjustments are needed.

I hope you found it helpful, and I look forward to your feedback!

Exploring Generative AI using MakerSuite and PaLM API was originally published in Google Developer Experts on Medium, where people are continuing the conversation by highlighting and responding to this story.
