Let’s make a chatbot that acts like you in three simple steps.

If you’d like to witness the abilities of a customized chatbot, check out AmjadGPT, a chatbot that speaks like the CEO of Replit.

That being said, let’s dive in 🌊!

Setup 🔧

Clone this repo, or smash the button below to get started.

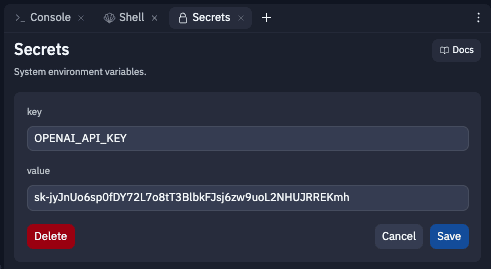

Create an API key 🔑

Navigate to your OpenAI account and then to your API Keys. Create a new API key and assign it to an environment variable named OPENAI_API_KEY.
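If you want the app to fail loudly when the key is missing, a small guard like the one below can help. This is only a sketch: getOpenAIKey is a hypothetical helper name, not something from the repo, and the only thing it assumes is that the key lives in OPENAI_API_KEY.

```javascript
// Hypothetical helper (not part of the repo): returns the API key from the
// environment, or throws a readable error if it is missing or blank.
function getOpenAIKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (!key || key.trim() === "") {
    throw new Error("OPENAI_API_KEY is not set. Add it to your environment variables.");
  }
  return key.trim();
}

// Usage (commented out so the snippet does not throw when no key is configured):
// const apiKey = getOpenAIKey();
```

Calling it once at startup means a missing key shows up as a clear error instead of a confusing failed request later on.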

Customize the Base Prompt 🎮

The base prompt is the master control of how your chatbot will behave. You can edit it in lib/basePrompt.js.

After playing around with the base prompt for a while, I found that this template produced accurate, good-quality responses.

You are ,

Use the following pieces of MemoryContext to answer the human. ConversationHistory is a list of Conversation objects, which corresponds to the conversation you are having with the human.

ConversationHistory: {history}

MemoryContext: {context}

Human: {prompt}

:

Quick Tip 💡: When you refer to the person that is talking to the chatbot, use a constant term such as “human”. This can improve the accuracy and performance of your LLM and decrease confusion.

Prompt Template Variables ⚙️

Notice the variables in the base prompt: {history}, {context}, and {prompt}. LangChain’s PromptTemplate module allows us to provide custom inputs and format a prompt from a string of text. In lib/generateResponse.js, we pass in the base prompt and specify its input variables.

const prompt = new PromptTemplate({
  template: promptTemplate,
  inputVariables: ["history", "context", "prompt"]
});

Prompt variables can take any name (preferably alphanumeric). Try to specify what each one does in the base prompt and give them a relevant name to make it easier for your LLM to understand.
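To get a feel for what filling in a template means, here is a hand-rolled stand-in written in plain JavaScript. It is not LangChain’s implementation; formatPrompt is a hypothetical function that only illustrates the idea of swapping each {name} placeholder for a value, and the sample values are made up.

```javascript
// Illustration only: a hand-rolled stand-in for what a prompt template does.
// Replaces each {name} placeholder with the matching entry in `values`.
function formatPrompt(template, values) {
  return template.replace(/\{(\w+)\}/g, (match, name) => {
    if (!(name in values)) {
      throw new Error(`Missing value for prompt variable "${name}"`);
    }
    return String(values[name]);
  });
}

// A shortened template using the same variable names as the base prompt.
const template = [
  "ConversationHistory: {history}",
  "MemoryContext: {context}",
  "Human: {prompt}",
].join("\n");

// Made-up sample values, just to show the substitution.
const filled = formatPrompt(template, {
  history: "[]",
  context: "Replit is an online IDE.",
  prompt: "What is Replit?",
});

console.log(filled);
```

Throwing on a missing variable is a deliberate choice in this sketch: a prompt with a leftover {context} in it tends to confuse the model far more than an error confuses you.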

Add your data 📄

Apart from the base prompt, all the data you pass to your chatbot goes through the training directory. At the moment, only Markdown files are used for training. If you would like to use a different file format such as .txt, make sure you specify it in script/initializeStore.js.

After specifying your file format, start uploading or creating files and folders in the directory. Folder names and depth don’t matter since all files of the desired format will be iterated through.
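Walking a folder of any depth and picking out files by extension can be sketched roughly like this. It is not the code from script/initializeStore.js; collectFiles and readTrainingTexts are hypothetical names, and the sketch assumes the files are UTF-8 text.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper: recursively collect the paths of all files under `dir`
// whose names end with `extension`. Folder names and depth do not matter.
function collectFiles(dir, extension = ".md") {
  const results = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      // Descend into subfolders.
      results.push(...collectFiles(fullPath, extension));
    } else if (entry.isFile() && entry.name.endsWith(extension)) {
      results.push(fullPath);
    }
  }
  return results;
}

// Hypothetical helper: read every matching file as UTF-8 text.
function readTrainingTexts(dir, extension = ".md") {
  return collectFiles(dir, extension).map((file) => fs.readFileSync(file, "utf8"));
}

// Usage (assumes a "training" folder exists next to the script):
// const texts = readTrainingTexts("training", ".md");
```

Switching to another format is then just a matter of passing a different extension, for example ".txt".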

Create a Vector Store 🤖

Run script/initializeStore.js. The initialization script will iterate through and read all files of the desired format in the training folder. After that, LangChain’s HNSWLib.fromTexts method will build the vector store from those texts, and the result is stored in the vectorStore directory.

Your chatbot should be working now ✨!

What is a Vector Store?

A vector store is a data structure used to represent and store large collections of high-dimensional vectors. Vectors are mathematical objects that have both magnitude and direction. In AI they are often used to represent features or attributes of data points.

A vector store is typically constructed by taking a large corpus of text or other types of data and extracting features from each data point. These features are then represented as high-dimensional vectors, where each dimension corresponds to a particular feature.

(Thank you, ChatGPT 😁)
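To make that less abstract, here is a toy vector store in plain JavaScript. It is a brute-force illustration, not how HNSWLib works internally (HNSW uses an approximate nearest-neighbour index), and the three-dimensional vectors are made up by hand; real embeddings come from an embedding model and have far more dimensions. ToyVectorStore and cosineSimilarity are hypothetical names.

```javascript
// Cosine similarity: close to 1 when two vectors point the same way,
// close to 0 when they are unrelated.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy store: keeps (text, vector) pairs and compares the query to every entry.
class ToyVectorStore {
  constructor() {
    this.entries = [];
  }

  add(text, vector) {
    this.entries.push({ text, vector });
  }

  // Return the k entries most similar to the query vector, best match first.
  similaritySearch(queryVector, k = 1) {
    return this.entries
      .map((e) => ({ text: e.text, score: cosineSimilarity(e.vector, queryVector) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, k);
  }
}

// Hand-made vectors, purely for illustration.
const store = new ToyVectorStore();
store.add("Replit is an online IDE", [0.9, 0.1, 0.0]);
store.add("Bananas are yellow", [0.0, 0.2, 0.9]);
store.add("Repls run in the browser", [0.8, 0.3, 0.1]);

const top = store.similaritySearch([1.0, 0.2, 0.0], 2);
console.log(top.map((r) => r.text));
```

The query vector points in roughly the same direction as the two Replit sentences, so those come back first and the banana sentence is left out.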

Vector Store Usage

Whenever a response is generated, a similarity search is run through the vectorStore/hnswlib.index file. Here’s what the process looks like when a response is generated (see lib/generateResponse.js):

- (on first run) We initialize our OpenAI LLM, PromptTemplate, LLMChain, and load our vector store from the vectorStore directory.
- A similarity search is conducted through the vector store to find any relevant Documents regarding the prompt.
- The relevant documents, the prompt, and (optionally) the conversation history are passed to LangChain to predict and generate a response.
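The flow above can be sketched in a few lines. This is not the contents of lib/generateResponse.js: generateResponse here is a simplified, synchronous stand-in, and searchStore and callModel are hypothetical functions you inject in place of the real vector store and LLM (whose calls are asynchronous in practice).

```javascript
// Simplified sketch of the response flow. `searchStore` and `callModel` are
// hypothetical stand-ins for the vector store lookup and the LLM call.
function generateResponse({ prompt, history = [], searchStore, callModel }) {
  // 1. Find documents relevant to the prompt.
  const documents = searchStore(prompt);

  // 2. Combine history, context, and the prompt into one piece of text.
  const fullPrompt = [
    `ConversationHistory: ${JSON.stringify(history)}`,
    `MemoryContext: ${documents.join("\n")}`,
    `Human: ${prompt}`,
  ].join("\n");

  // 3. Hand the finished prompt to the model and return its answer.
  return callModel(fullPrompt);
}

// Usage with fake dependencies, so the sketch runs without an API key.
const fakeSearch = () => ["Replit is an online IDE."];
const fakeModel = (text) => `(model received ${text.split("\n").length} lines)`;

console.log(
  generateResponse({
    prompt: "What is Replit?",
    searchStore: fakeSearch,
    callModel: fakeModel,
  })
);
```

Passing the search and model functions in as arguments keeps the sketch runnable offline and makes it easy to see which part does what.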

FAQ

Can I use other file formats like .pdf and .html?

Theoretically you can, but try to use UTF-8 files. Passing a non-UTF-8 file like a PDF will either make your chatbot speak to you in non-UTF-8 or make it completely useless.

Is the process here actually training/fine-tuning the AI model, or just adding documents for context?

The data you pass to your chatbot will get compiled into a single vector store file and be used as context when generating responses. No actual fine-tuning or training processes are taking place.

Can GPT directly scrape text from a site? What is the advantage of using LangChain over referencing the documentation URLs directly?

OpenAI LLMs don’t have internet access and can’t provide information such as the current date and time. Everything GPT outputs is based on what it learned during training plus whatever you include in the prompt, which is why the relevant documents are passed in as context.

What is the advantage of creating the vectorStore directory over running the store initialization script?

It takes a lot of time and processing power to generate the vector store. Saving it to a directory means it only has to be generated once; after that, loading it from disk puts far less stress on the system.

Why isn’t my OpenAI API key working in Replit?

Run kill 1 in the Repl’s shell to force a reboot. Also, make sure you’re using Node.js 18.

In conclusion, that’s all it takes to make a personalized chatbot. The next step for your chatbot depends on your imagination and creativity – tell me what you’re making next in the comments 👇🔥!

If you’ve enjoyed this, drop some reactions and follow @IroncladDev on Twitter!

Keep your eyes open for more AI content 🤖!

Thanks for reading 🙏!