Browsing Tag

Gemma

11 posts

Pipeline to create .task files for MediaPipe LLM Inference API

Written by Georgios Soloupis, AI and Android GDE…

On-Device Function Calling with FunctionGemma

A Practical Guide to preparing and using Google’s smallest function calling model for Mobile and Web Developers. Why…

Fine-Tuning Gemma with LoRA for On-Device Inference (Android, iOS, Web) with Separate LoRA Weights

Prologue: Lately, I’ve become deeply interested in working with Edge AI. What fascinates me most is the potential…

PaliGemma on Android using Hugging Face API

Introduction: At Google I/O 2024, Google unveiled a new addition to the Gemma family: PaliGemma, alongside several new…

Next-Gen RAG with Couchbase and Gemma 3: Building a Scalable AI-Powered Knowledge System

Introduction: Retrieval-Augmented Generation (RAG) is revolutionizing AI applications by combining the power of retrieval-based search with generative models.…

PaliGemma Fine-tuning for Multimodal Classification

Creating more dependable and accurate machine learning models nowadays depends on combining data from several modalities, including text…

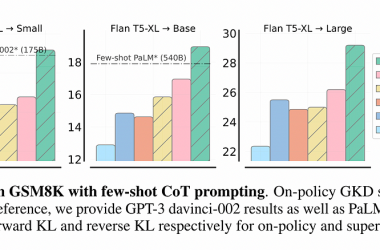

Online Knowledge Distillation: Advancing LLMs like Gemma 2 through Dynamic Learning

Large Language Models (LLMs) are rapidly evolving, with recent developments in models like Gemini and Gemma 2 bringing…

[ML Story] Part 3: Deploy Gemma on Android

Written in collaboration with AI/ML GDE Aashi Dutt. Introduction: In the preceding two articles, we successfully learned how to…

Fine-tuning Gemma with QLoRA

Fine-tuning language models has become a powerful technique for adapting pre-trained models to specific tasks. This article explores…

Fine Tuning Gemma-2b to Solve Math Problems

Mathematical word problem-solving has long been recognized as a complex task for small language models (SLMs). To reach a…

![[ml-story]-part-3:-deploy-gemma-on-android](https://prodsens.live/wp-content/uploads/2024/04/22445-ml-story-part-3-deploy-gemma-on-android-380x250.gif)