Google AI Edge provides the tools to run AI features on-device, and its new LiteRT-LM runtime is a significant step forward for generative AI. LiteRT-LM is an open-source runtime with a C++ API, cross-platform compatibility, and hardware acceleration, designed to run large language models like Gemma and Gemini Nano efficiently across a wide range of hardware. Its key innovation is a flexible, modular architecture that can scale up to power complex, multi-task features in Chrome and Chromebook Plus while staying lean enough for resource-constrained devices like the Pixel Watch. This versatility is already enabling a new wave of on-device generative AI, bringing capabilities like WebAI and smart replies to users.

Related Posts

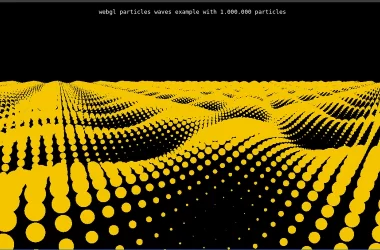

🧠 From 2,500 to 1,000,000 Particles: Supercharging a Three.js Demo with WebAssembly

When I first explored the classic webgl_points_waves demo in Three.js, I was amazed by the simplicity and elegance…

Google for Games is coming to GDC 2024

Join us on March 19 for the Game Developers Conference (GDC) where game developers will gather to learn,…

Variable scope in C programming.

A variable scope can determine which variable can be accessed from which region of the program. In C…