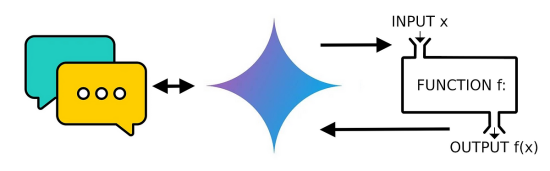

Large Language Models (LLMs), by nature of their pre-training, traditionally struggle to provide answers based on current, real-time data. However, function calling overcomes this limitation. I’ll demonstrate how to use it with the Gemini API on Vertex AI.

Why function calling?

When generative AI first came onto the scene, one of the tasks it was surprisingly bad at was math, despite the long-established presence of mathematical functions and libraries in programming. Why did it perform so poorly?

Much like how our brains use distinct areas for language and math, Large Language Models (LLMs) are primarily trained on linguistic data, leaving their “math” abilities underdeveloped. Imagine knowing you need to multiply 4 x 25, but never having learned multiplication itself. The solution to this problem was quite clever: if LLMs haven’t learned math, simply give them a calculator!

This is the core idea behind function calling, an early and effective solution. Once the LLM identifies the need for a math operation, it simply extracts the numbers and delegates the actual calculation to an external function designed specifically for that purpose.
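To make the "calculator" idea concrete, here is a minimal sketch of the delegation step in plain Python. The function and argument names (add_function, multiply_function, first_number, second_number) mirror the ones that appear in the sample output later in this post; the FUNCTIONS table and the dispatch helper are hypothetical names I made up for illustration and are not part of the Vertex AI SDK.

```python
from typing import Callable, Dict


def add_function(first_number: float, second_number: float) -> float:
    """Add two numbers on behalf of the model."""
    return first_number + second_number


def multiply_function(first_number: float, second_number: float) -> float:
    """Multiply two numbers on behalf of the model."""
    return first_number * second_number


# Hypothetical lookup table: function name requested by the model -> Python callable.
FUNCTIONS: Dict[str, Callable[..., float]] = {
    "add_function": add_function,
    "multiply_function": multiply_function,
}


def dispatch(name: str, args: dict) -> float:
    """Run the function the model asked for, using the arguments it extracted."""
    if name not in FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    return FUNCTIONS[name](**args)


# The model only has to say *what* to compute; the math itself happens here.
print(dispatch("multiply_function", {"first_number": 4, "second_number": 25}))
```

The model never performs the arithmetic in this setup. It only chooses a function name and fills in the arguments.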

What about real-time data queries?

If you asked Gemini (or any LLM) to find upcoming showtimes for a movie, it would struggle because it is pre-trained on past data, so its knowledge is cut off at a certain date. The LLM can understand your question (e.g. “What time is Moana 2 showing at Cineplex tomorrow?”), but it just doesn’t have the knowledge to answer it. Using function calling, the model can extract the key pieces of information (i.e. movie, theatre, date) and pass them to a function that queries a movie showtimes API to get you the answer you’re looking for.
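As a rough illustration of what "key pieces of information" means in practice, the sketch below describes a showtimes function as an OpenAPI-style schema written as a plain Python dict, plus a small check for missing arguments. Everything here is an assumption for illustration: get_showtimes, the field descriptions, and the missing_arguments helper are hypothetical, and no real showtimes API is called.

```python
from typing import List

# Hypothetical declaration of a showtimes function, written as a plain dict.
# It tells the model which arguments to extract from the user's question.
SHOWTIMES_DECLARATION = {
    "name": "get_showtimes",
    "description": "Look up showtimes for a movie at a theatre on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "movie": {"type": "string", "description": "Movie title"},
            "theatre": {"type": "string", "description": "Theatre or chain name"},
            "date": {"type": "string", "description": "Date of the showing"},
        },
        "required": ["movie", "theatre", "date"],
    },
}


def missing_arguments(declaration: dict, args: dict) -> List[str]:
    """Return the required argument names that were not extracted."""
    required = declaration["parameters"]["required"]
    return [name for name in required if name not in args]


# Arguments a model might extract from
# "What time is Moana 2 showing at Cineplex tomorrow?"
extracted = {"movie": "Moana 2", "theatre": "Cineplex", "date": "tomorrow"}
print(missing_arguments(SHOWTIMES_DECLARATION, extracted))
```

Checking for missing arguments before calling the downstream API is a design choice of this sketch; it lets the application ask a follow-up question instead of sending an incomplete query.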

Example code requirements

I have some sample code that will help illustrate the power of function calling. To run the code I’ve provided, you will need to install the Vertex AI SDK for Python, google-cloud-aiplatform.

I also highly recommend increasing your Vertex AI quota for “Generate content requests per minute per project per base model per minute per region per base_model”. The default is 5, but I recommend increasing it to 10 or higher.

Math example

Let’s start with a math example. The code below queries the Vertex AI Gemini API. I’ll ask the same questions to two chat models and demonstrate that, while the answers are identical, the reasoning process differs.

https://medium.com/media/741ab8d4696849b2adf638c88d297102/href

NOTE: you can comment out all the “INFO” print statements if you wish to just see the final response.

Part 1: Math without function calling

    basic_model = GenerativeModel(
        model_name=model_name,
        generation_config=GenerationConfig(temperature=0),
    )

Nothing fancy here. I’m just submitting my questions to Gemini with its temperature set to 0. Here is a sample response (your response may or may not have the emojis in it):

    -------------------BASIC CHAT-----------------
    Initial response: role: "model"
    parts {
      text: "Sam is Bear's owner. 🐶 \n"
    }

    Sam is Bear's owner. 🐶

    Initial response: role: "model"
    parts {
      text: "There are **2** pizzas left. 🍕🍕\n\nHere's how to solve it:\n\n* **Start with the number of pizzas Bob brought:** 4\n* **Subtract the number Charlie dropped:** -2\n* **Total pizzas left:** 4 - 2 = 2 \n"
    }

    There are **2** pizzas left. 🍕🍕

    Here's how to solve it:

    * **Start with the number of pizzas Bob brought:** 4
    * **Subtract the number Charlie dropped:** -2
    * **Total pizzas left:** 4 - 2 = 2

    Initial response: role: "model"
    parts {
      text: "Here's how to solve the problem step-by-step:\n\n**1. Find the total number of pizzas:**\n\n* Alice's pizzas + Bob's pizzas + Charlie's pizzas = Total pizzas\n* 12 + 8 + 5 = 25 pizzas\n\n**2. Calculate the total number of slices:**\n\n* Total pizzas * Slices per pizza = Total slices\n* 25 * 8 = 200 slices\n\n**Answer: There are a total of 200 slices of pizza.** 🍕 \n"
    }

    Here's how to solve the problem step-by-step:

    **1. Find the total number of pizzas:**

    * Alice's pizzas + Bob's pizzas + Charlie's pizzas = Total pizzas
    * 12 + 8 + 5 = 25 pizzas

    **2. Calculate the total number of slices:**

    * Total pizzas * Slices per pizza = Total slices
    * 25 * 8 = 200 slices

    **Answer: There are a total of 200 slices of pizza.** 🍕

The responses here aren’t all that surprising, given how much Gemini (and LLMs in general) have improved thanks to better training data and a greater focus on math and computational capabilities.

Now let’s see how this example would’ve worked using function calling…

Part 2: Math WITH function calling

You will notice the generative model declared here is a little different. I needed to specify an additional system_instruction so that Gemini does not try to solve the math questions on its own like in the first part of this example.

    model = GenerativeModel(
        model_name=model_name,
        generation_config=GenerationConfig(temperature=0),
        system_instruction="Do not do any math yourself, but answer the user's question correctly.",
        tools=[math_tool],
    )

I also gave it some tools to use, in the form of functions that I had declared. Finally, I wrote a handle_response(response) function that parses the response parts and acts accordingly: it calls another function if necessary, or outputs the final response if the part is just text.
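The loop that such a handler performs can be sketched without the SDK. In the simplified version below, plain dicts stand in for the SDK's response parts, and scripted_model is a hypothetical stand-in for the chat session that replays the three-step pizza question from the sample output. This is only an illustration of the control flow under those assumptions, not the actual handle_response from the sample code.

```python
from typing import Callable, Dict

# Local "tools" the model is allowed to call (names taken from the sample output).
TOOLS: Dict[str, Callable[..., float]] = {
    "add_function": lambda first_number, second_number: first_number + second_number,
    "multiply_function": lambda first_number, second_number: first_number * second_number,
}


def handle_response(response: dict, send_result: Callable[[str, float], dict]) -> str:
    """Keep executing requested function calls until the model returns text."""
    while "function_call" in response:
        call = response["function_call"]
        print(f"Calling {call['name']}")
        result = TOOLS[call["name"]](**call["args"])
        # Hand the result back to the model and inspect its next response.
        response = send_result(call["name"], result)
    return response["text"]


def scripted_model(name: str, result: float) -> dict:
    """Hypothetical stand-in for the chat session: replays a fixed script."""
    if name == "add_function" and result == 20:
        return {"function_call": {"name": "add_function",
                                  "args": {"first_number": 20, "second_number": 5}}}
    if name == "add_function" and result == 25:
        return {"function_call": {"name": "multiply_function",
                                  "args": {"first_number": 25, "second_number": 8}}}
    return {"text": f"There are {result} slices in total."}


first_response = {"function_call": {"name": "add_function",
                                    "args": {"first_number": 12, "second_number": 8}}}
final_answer = handle_response(first_response, scripted_model)
print(final_answer)
```

The important property is that the handler keeps looping: a single user question can trigger several function calls in a row before any text comes back.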

    -------------------CHAT w/FUNCTION CALLING-----------------
    Initial response: role: "model"
    parts {
      text: "Sam is Bear's owner. \n"
    }

    Sam is Bear's owner.

    Initial response: role: "model"
    parts {
      function_call {
        name: "add_function"
        args {
          fields {
            key: "second_number"
            value {
              number_value: -2
            }
          }
          fields {
            key: "first_number"
            value {
              number_value: 4
            }
          }
        }
      }
    }

    Calling Add function
    Function call response: role: "model"
    parts {
      text: "There are 2 pizzas left. \n"
    }

    There are 2 pizzas left.

    Initial response: role: "model"
    parts {
      function_call {
        name: "add_function"
        args {
          fields {
            key: "second_number"
            value {
              number_value: 8
            }
          }
          fields {
            key: "first_number"
            value {
              number_value: 12
            }
          }
        }
      }
    }

    Calling Add function
    Function call response: role: "model"
    parts {
      function_call {
        name: "add_function"
        args {
          fields {
            key: "second_number"
            value {
              number_value: 5
            }
          }
          fields {
            key: "first_number"
            value {
              number_value: 20
            }
          }
        }
      }
    }

    Calling Add function
    Function call response: role: "model"
    parts {
      function_call {
        name: "multiply_function"
        args {
          fields {
            key: "second_number"
            value {
              number_value: 8
            }
          }
          fields {
            key: "first_number"
            value {
              number_value: 25
            }
          }
        }
      }
    }

    Calling Multiply function
    Function call response: role: "model"
    parts {
      text: "There are 200 slices in total. \n"
    }

    There are 200 slices in total.

As you can see, the first question does not involve math, so the response part is simply text: “Sam is Bear’s owner.” For the math problems, however, Gemini recognizes the need for a function call and extracts the necessary numbers as arguments, which are then passed to the function. Gemini handles the comprehension and logic of the question, delegates the calculation to the appropriate function, and then provides the user with the final answer.

Weather API example

While the previous math example provided background on function calling, it wasn’t a practical real-world application. Here’s a more relevant example, using similarly structured code:

https://medium.com/media/132e68495976028502e46bfbca7f41ec/href

I chose Open-Meteo because you can use their API without signing up or obtaining an API key (for non-commercial use), so shoutout to them for providing this free service! Although Open-Meteo offers a Python package, I used curl requests for broader compatibility, as not every site provides a corresponding Python package.

Here’s a sample output from the above weather query example:

    -------------------CHAT w/FUNCTION CALLING-----------------
    INFO - Initial response: role: "model"
    parts {
      function_call {
        name: "geocoding_function"
        args {
          fields {
            key: "country"
            value {
              string_value: "CA"
            }
          }
          fields {
            key: "city"
            value {
              string_value: "Toronto"
            }
          }
        }
      }
    }

    Calling geocoding-api
    INFO - Function call response: role: "model"
    parts {
      function_call {
        name: "weather_function"
        args {
          fields {
            key: "longitude"
            value {
              number_value: -79.4163
            }
          }
          fields {
            key: "latitude"
            value {
              number_value: 43.70011
            }
          }
        }
      }
    }

    Calling weather-api
    INFO - Function call response: role: "model"
    parts {
      text: "The weather in Toronto, CA is -7.8 degrees Celsius."
    }

    The weather in Toronto, Canada is -7.8 degrees Celsius.

    INFO - Initial response: role: "model"
    parts {
      function_call {
        name: "geocoding_function"
        args {
          fields {
            key: "country"
            value {
              string_value: "Brazil"
            }
          }
          fields {
            key: "city"
            value {
              string_value: "Sao Paulo"
            }
          }
        }
      }
    }

    Calling geocoding-api
    INFO - Function call response: role: "model"
    parts {
      function_call {
        name: "weather_function"
        args {
          fields {
            key: "longitude"
            value {
              number_value: -46.63611
            }
          }
          fields {
            key: "latitude"
            value {
              number_value: -23.5475
            }
          }
        }
      }
    }

    Calling weather-api
    INFO - Function call response: role: "model"
    parts {
      text: "The weather in Sao Paulo, Brazil is 25.2 degrees Celsius. \n"
    }

    The weather in Sao Paulo, Brazil is 25.2 degrees Celsius.

Gemini accurately interpreted the prompt and used the appropriate tools to find the answer. Given the city and country, it first recognized the need for latitude and longitude. Using its geocoding function and processing the resulting JSON, Gemini then passed these coordinates to the weather function, ultimately formatting the data into a readable response.
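For readers who want to see the two API steps in isolation, here is a minimal sketch of the geocoding-then-weather chain using only the Python standard library. It is not the code from the example above: the helper names are hypothetical, it uses urllib instead of curl, and it looks a city up by name only (ignoring the country argument seen in the output). The endpoints and the `current=temperature_2m` parameter reflect my understanding of Open-Meteo's public API, so check their documentation before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

GEOCODING_URL = "https://geocoding-api.open-meteo.com/v1/search"
FORECAST_URL = "https://api.open-meteo.com/v1/forecast"


def build_geocoding_url(city: str) -> str:
    """Build the geocoding query for a city name (first match only)."""
    return f"{GEOCODING_URL}?{urlencode({'name': city, 'count': 1})}"


def build_weather_url(latitude: float, longitude: float) -> str:
    """Build the forecast query asking for the current temperature."""
    query = urlencode(
        {"latitude": latitude, "longitude": longitude, "current": "temperature_2m"}
    )
    return f"{FORECAST_URL}?{query}"


def fetch_json(url: str) -> dict:
    """Perform an HTTP GET and decode the JSON body."""
    with urlopen(url, timeout=10) as reply:
        return json.load(reply)


def current_temperature(city: str) -> float:
    """Chain the two calls: city name -> coordinates -> current temperature."""
    place = fetch_json(build_geocoding_url(city))["results"][0]
    weather = fetch_json(build_weather_url(place["latitude"], place["longitude"]))
    return weather["current"]["temperature_2m"]


# Building the URLs needs no network access; current_temperature("Toronto") does.
print(build_weather_url(43.70011, -79.4163))
```

With function calling, Gemini decides when to invoke each step and supplies the arguments, so the application code only needs the two small wrappers rather than the hard-coded chain in current_temperature.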

Summary

Function calling is a great technique for letting LLMs like Gemini interact with real-time data. The applications are endless: stock markets, travel bookings, movie showtimes, weather, and more. If an API exists, you can build a generative AI application that understands user queries, extracts key components, and retrieves the desired information.

Function Calling with Vertex AI Gemini API was originally published in Google Developer Experts on Medium, where people are continuing the conversation by highlighting and responding to this story.