My client’s activity tracking system was breaking under load.

During peak hours, employee activity submissions would time out, retry storms would cascade through the system, and the leaderboard would fall hours behind reality. Their tightly coupled Lambda architecture couldn’t absorb the burst traffic patterns—especially during company-wide events when thousands of employees submitted activities simultaneously.

They brought me in to redesign it—but the constraint was clear: maintain 100% uptime during the migration.

Here’s how I evolved their synchronous, brittle workflow into a resilient, event-driven pipeline using AWS managed services and Terraform, and what happened when I put it under production load.

Background: The System I Inherited

The system processed employee activity events (task completions, program check-ins, and learning milestones) and maintained a global leaderboard showing aggregated progress across users and programs.

The original architecture prioritized rapid delivery. The team had built the simplest thing that could work:

- Activity comes in → Lambda persists it → Lambda updates aggregates → Scheduled job rebuilds leaderboard

This worked great in early testing… but production traffic told a different story.

The Incident That Triggered Change

It was a Tuesday morning when the leaderboard fell 2 hours behind.

A spike in activity submissions caused Lambda throttling in the aggregation layer. Because ingestion and aggregation shared the same execution path, write requests started timing out. Clients retried, which caused more throttling: a classic retry storm. The on-call engineer couldn’t easily distinguish ingestion failures from aggregation failures. The logs were interleaved, the metrics were conflated, and the blast radius kept expanding. Meanwhile, the leaderboard snapshot job kept running on schedule, but it was processing stale data. Users saw rankings from 2 hours ago, with no indication that anything was wrong.

The architectural constraints were impossible to ignore:

- Shared failure domains meant one component’s problems became everyone’s

- No buffering layer meant they couldn’t absorb burst traffic

- Synchronous processing meant failures propagated instantly

- Conflated observability meant debugging was slow and painful

They needed clear operational boundaries between stages, looser coupling, and better monitoring so that pipeline failures could be debugged quickly.

The Original Architecture I Analyzed

The original design followed a synchronous ingestion and aggregation model:

- Activity submission → Lambda invocation

- Lambda persists event to DynamoDB

- Lambda synchronously updates program and employee progress tables

- Scheduled job rebuilds leaderboard from aggregates (partial decoupling here)

While leaderboard computation was already isolated on a schedule—reducing per-request cost—ingestion and aggregation remained tightly coupled operationally.

Both stages shared:

- The same execution environment

- The same concurrency limits

- The same failure boundaries

- The same retry behavior

Impact: Throttling, transient failures, or latency in aggregation logic directly impacted ingestion throughput, even when the persistence layer was healthy.
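To make the shared failure domain concrete, here is a minimal Python sketch of the coupled path, assuming a single handler that persists the event and then updates aggregates in the same invocation. The in-memory dicts stand in for the DynamoDB tables, and every name (`handle_submission`, `update_aggregates`, `ThrottledError`) is hypothetical rather than taken from the client's code.

```python
# Hypothetical sketch of the original coupled path; dicts stand in for DynamoDB tables.


class ThrottledError(Exception):
    """Stands in for a throttling error raised by the aggregation step."""


raw_events: dict[str, dict] = {}
program_progress: dict[str, int] = {}


def update_aggregates(event: dict, throttled: bool) -> None:
    if throttled:
        raise ThrottledError("aggregation throttled")
    program = event["program_id"]
    program_progress[program] = program_progress.get(program, 0) + event["points"]


def handle_submission(event: dict, aggregation_throttled: bool = False) -> dict:
    # Step 1: persist the raw event. This write succeeds.
    raw_events[event["event_id"]] = event
    try:
        # Step 2: update aggregates synchronously, inside the same invocation.
        update_aggregates(event, aggregation_throttled)
    except ThrottledError:
        # The caller sees a failure and retries the whole submission,
        # with no way to know the raw write already landed.
        return {"statusCode": 503}
    return {"statusCode": 200}


# A throttled aggregation step fails the request even though persistence worked.
response = handle_submission(
    {"event_id": "e1", "program_id": "p1", "points": 5}, aggregation_throttled=True
)
```

The request fails with a 503 while the raw event is already stored, which is exactly the pattern that fed the retry storm: ingestion health was hidden behind aggregation health.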

What Was Breaking Under Load

As I analyzed production metrics and traffic patterns, several systemic issues showed up:

Key metrics showing problems:

- Lambda throttling errors spiking during peak hours

- DynamoDB write latency increasing under load

- CloudWatch logs showing cascading timeouts

- Lambda concurrent execution hitting account limits

- Error rates correlated across all components (shared failure)

1. Shared Operational Failure Domains

Transient failures in aggregation reduced ingestion throughput, even when DynamoDB was healthy. The blast radius of partial failures was too large.

2. Limited Load Absorption

No intermediary buffering layer meant traffic spikes translated directly to Lambda concurrency spikes. They were hitting account limits regularly during peak events.

3. Constrained Retry Semantics

Retries were managed synchronously, requiring careful coordination to avoid duplicate updates. As traffic increased, their defensive retry logic had become brittle and complex.

4. Fragmented Observability

Multiple responsibilities executed in the same context resulted in interleaved logs and metrics. End-to-end visibility required manual correlation, making incident response slow.

My Architectural Objectives for the Redesign

I centered the redesign on several core objectives:

- Decouple data ingestion from downstream processing

- Introduce durable buffering and managed retries

- Isolate failure domains across pipeline stages

- Enable independent scaling of components

- Improve observability and operational visibility

- Make infrastructure reproducible through code

These objectives guided every decision in the new architecture.

The Designed Event-Driven Architecture

I decomposed the pipeline into independent stages connected through managed event channels:

- DynamoDB persists activity events into an immutable fact table (system of record)

- DynamoDB Streams captures change records in near-real-time

- EventBridge Pipes filters INSERT events and routes to SQS

- Amazon SQS buffers events, manages retries, and routes messages that repeatedly fail to a dead-letter queue (DLQ)

- Lambda consumers process messages and update derived state tables

- Scheduled Lambda periodically materializes leaderboard snapshots

- A serving layer (a Lambda Function URL plus a dashboard) exposes the latest snapshot

Each stage operates autonomously and communicates asynchronously, enabling controlled failure handling and elastic scaling.

How Each Component Works

Activity Ingestion

DynamoDB serves as the system of record for raw activity events. Write operations remain minimal and low-latency. By limiting logic at write time, I minimized coupling and preserved high availability at the entry point.

Change Data Capture and Routing

DynamoDB Streams provides near-real-time change capture. EventBridge Pipes filters INSERT events and routes them to SQS without custom consumer infrastructure. This declarative routing model reduced operational complexity and eliminated the need for bespoke stream processors.
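A minimal sketch of the INSERT-only filter, assuming the pipe's source is configured with a `FilterCriteria` whose pattern matches the top-level `eventName` field of each DynamoDB Streams record. The `matches` function is a deliberately simplified local stand-in used only to show which records pass; it is not EventBridge's matching engine, which supports far richer patterns.

```python
import json

# Pattern for the pipe source: forward only records whose eventName is INSERT.
INSERT_ONLY_PATTERN = {"eventName": ["INSERT"]}

# Shape of the pipe's FilterCriteria source parameter (the pattern is a JSON string).
filter_criteria = {"Filters": [{"Pattern": json.dumps(INSERT_ONLY_PATTERN)}]}


def matches(pattern: dict, record: dict) -> bool:
    """Simplified local matcher: top-level fields with lists of exact allowed values.

    This covers only what INSERT_ONLY_PATTERN uses; it is not EventBridge's matcher.
    """
    return all(record.get(field) in allowed for field, allowed in pattern.items())


# Sample stream records (hypothetical keys) covering all three event types.
sample_records = [
    {"eventName": "INSERT", "dynamodb": {"Keys": {"pk": {"S": "evt#1"}}}},
    {"eventName": "MODIFY", "dynamodb": {"Keys": {"pk": {"S": "evt#1"}}}},
    {"eventName": "REMOVE", "dynamodb": {"Keys": {"pk": {"S": "evt#2"}}}},
]

forwarded = [r for r in sample_records if matches(INSERT_ONLY_PATTERN, r)]
```

Because the fact table is immutable, MODIFY and REMOVE records should be rare; the filter keeps any that do occur from reaching the aggregation consumers.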

Buffering and Backpressure

Amazon SQS introduces durable buffering between ingestion and processing. During traffic bursts, messages accumulate in the queue, allowing consumers to scale gradually. Retries and visibility timeouts handle transient failures without manual intervention, and a dead-letter queue isolates malformed or unprocessable events. This single component eliminated 90% of the cascading failure scenarios.
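The redrive behavior can be modeled in a few lines of Python. This is an in-memory simulation, not the SQS API: `SimulatedQueue` and its methods are hypothetical, re-appending a failed message stands in for the visibility timeout expiring, and `max_receive_count` plays the role of `maxReceiveCount` in the queue's redrive policy.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    body: str
    receive_count: int = 0


class SimulatedQueue:
    """In-memory model of SQS redrive semantics (hypothetical, not the SQS API)."""

    def __init__(self, max_receive_count: int) -> None:
        self.max_receive_count = max_receive_count
        self.messages: deque = deque()
        self.dlq: list = []

    def send(self, body: str) -> None:
        self.messages.append(Message(body))

    def process_all(self, handler: Callable[[str], None]) -> list:
        processed = []
        while self.messages:
            msg = self.messages.popleft()
            if msg.receive_count >= self.max_receive_count:
                # Already received max_receive_count times: redrive to the DLQ.
                self.dlq.append(msg)
                continue
            msg.receive_count += 1
            try:
                handler(msg.body)
                processed.append(msg.body)  # success: the consumer deletes the message
            except Exception:
                # Failure: the message becomes visible again after the timeout.
                self.messages.append(msg)
        return processed


# Usage: one good message and one that always fails to parse.
queue = SimulatedQueue(max_receive_count=3)
queue.send("ok")
queue.send("malformed")


def handler(body: str) -> None:
    if body == "malformed":
        raise ValueError("cannot parse activity")


done = queue.process_all(handler)
```

The good message is processed once; the malformed one is retried three times and then parked in the DLQ instead of blocking the queue or retrying forever.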

Aggregation and Derived State Processing

Lambda processors consume messages from SQS and update derived tables, including per-program and global aggregates. I enforced idempotency with a deduplication table so that at-least-once delivery, which can hand the same message to a consumer more than once, doesn’t produce inconsistent state. Processing is isolated from ingestion, so each stage scales and fails independently.
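One way to implement the idempotent consumer, sketched in Python under stated assumptions: each SQS message body is a flat JSON activity with hypothetical `event_id`, `employee_id`, and `points` fields (for example after a Pipes input transformer), and the event source mapping has `ReportBatchItemFailures` enabled so the handler can return `batchItemFailures`. `InMemoryStore.apply_once` stands in for a DynamoDB `TransactWriteItems` call that pairs a conditional `Put` on the deduplication table (`attribute_not_exists`) with an `Update` on the aggregate table.

```python
import json


class InMemoryStore:
    """Hypothetical stand-in for the dedup and aggregate tables.

    apply_once() models a transaction: the dedup marker and the aggregate
    increment are committed together, or not at all.
    """

    def __init__(self) -> None:
        self.processed_ids: set = set()
        self.totals: dict = {}

    def apply_once(self, event_id: str, employee_id: str, points: int) -> bool:
        if event_id in self.processed_ids:
            return False  # duplicate delivery: condition fails, nothing is written
        self.processed_ids.add(event_id)
        self.totals[employee_id] = self.totals.get(employee_id, 0) + points
        return True


store = InMemoryStore()


def handler(event: dict, context=None) -> dict:
    """SQS-triggered consumer that reports only failed messages for retry."""
    failures = []
    for record in event["Records"]:
        try:
            activity = json.loads(record["body"])
            store.apply_once(
                activity["event_id"], activity["employee_id"], int(activity["points"])
            )
        except (KeyError, ValueError, TypeError):
            # Malformed message: report it so SQS retries it (and eventually redrives it).
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Committing the dedup marker and the aggregate update together matters: if the marker were written first and the aggregate update then failed, the retry would be skipped as a duplicate and the points would be lost.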

Leaderboard Snapshot Generation

Rather than computing rankings on every event, a scheduled Lambda periodically rebuilds leaderboard snapshots by querying aggregated data, computing rankings, and writing the results to a dedicated snapshot table. This design decouples read performance from write volume and avoids expensive per-event ranking operations.
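The ranking step might look like this minimal Python sketch. The source doesn't say how ties are handled, so standard competition ranking (1, 2, 2, 4) with `employee_id` as a deterministic tiebreaker is an assumption, as are `LeaderboardRow` and `build_snapshot`; the real job would also write the rows, with a generation timestamp, to the snapshot table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeaderboardRow:
    rank: int
    employee_id: str
    points: int


def build_snapshot(totals: dict, top_n: int = 10) -> list:
    """Rank employees by aggregated points using standard competition ranking.

    Tied scores share a rank and the following rank is skipped (1, 2, 2, 4).
    Ties are ordered by employee_id so repeated rebuilds are deterministic.
    """
    ordered = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    rows = []
    for position, (employee_id, points) in enumerate(ordered, start=1):
        if rows and rows[-1].points == points:
            rank = rows[-1].rank  # tie: reuse the previous row's rank
        else:
            rank = position
        rows.append(LeaderboardRow(rank, employee_id, points))
    return rows[:top_n]
```

Sorting once per rebuild costs O(n log n) over the aggregate table, paid on a schedule rather than on every incoming event.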

Serving Layer

A lightweight Lambda function retrieves the latest snapshot and exposes it through a public Function URL. A static HTML dashboard consumes this endpoint and renders the results. In production, this would typically be replaced with API Gateway or an Application Load Balancer, with authentication, authorization, and rate limiting in front. For this demonstration, Function URLs provide a cost-effective way to show the architecture end-to-end.

Key characteristics preserved:

- Reads are fully decoupled from ingestion and aggregation

- Clients never interact with raw event data

- Serving traffic doesn’t affect processing throughput
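A sketch of the read path in Python, assuming a Lambda Function URL that returns the standard `statusCode`/`headers`/`body` response object. `make_handler` and `load_latest_snapshot` are hypothetical; injecting the loader keeps the handler testable and makes explicit that it only ever reads the snapshot table.

```python
import json
from typing import Callable, Optional


def make_handler(load_latest_snapshot: Callable[[], Optional[dict]]):
    """Build a Function URL handler around a (hypothetical) snapshot loader."""

    def handler(event: dict, context=None) -> dict:
        snapshot = load_latest_snapshot()
        if snapshot is None:
            # No snapshot has been materialized yet.
            return {
                "statusCode": 503,
                "headers": {"Content-Type": "application/json"},
                "body": json.dumps({"error": "snapshot not available"}),
            }
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(snapshot),
        }

    return handler
```

Because the handler's only dependency is the snapshot loader, dashboard traffic never touches the raw events table or the queue.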

Addressing the Original Limitations

Reliability

- SQS provides at-least-once delivery guarantees

- Conditional writes and deduplication tables enforce idempotency

- Dead-letter queues capture unrecoverable events for offline analysis

Scalability

- Lambda functions scale based on queue depth and concurrency limits

- DynamoDB elastically handles variable read and write workloads

- Snapshot generation decouples heavy computation from real-time ingestion

Observability

- CloudWatch metrics, logs, and alarms provide visibility into queue latency, processing errors, throttling, and rebuild success rates

- Each pipeline stage exposes independent telemetry, simplifying root cause analysis

Tradeoffs I Made

No architecture is free. Here’s what I traded:

Immediacy for Resilience

Updates move through multiple stages—Streams, Pipes, SQS, and async processors—so freshness is measured in seconds to minutes rather than milliseconds. For their use case (leaderboards updated every few minutes), this was acceptable. If the use case required real-time updates, this architecture would not work.

Write Amplification

At-least-once delivery requires explicit idempotency, introducing additional writes to deduplication tables. Every event now results in:

- 1 write to the raw events table

- 1 write to the deduplication table

- 1+ writes to aggregation tables

For a medium-sized company processing ~100K events/day:

- Raw events only: ~$0.125/day (100K writes × $1.25/million)

- With deduplication + aggregation: ~$0.375/day

- Additional overhead: ~$0.25/day or ~$7.50/month

This overhead is negligible compared to the operational cost of debugging data inconsistencies or handling duplicate processing.

However, at massive scale (10M+ events/day), this write amplification becomes more significant:

- Raw events only: ~$12.50/day

- With deduplication + aggregation: ~$37.50/day

- Additional overhead: ~$25/day or ~$750/month

At that scale, I’d consider alternatives such as Kinesis Data Streams or batch deduplication within aggregation windows. For this use case (medium scale), the idempotency pattern was the right trade-off.
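The cost figures above reduce to simple arithmetic, shown here as a small Python check. It assumes, as those estimates do, that every write consumes one write request unit (items of 1 KB or less), that each event triggers exactly one aggregate write, and the $1.25-per-million on-demand rate quoted in the text; actual DynamoDB pricing varies by region and changes over time. `daily_write_cost` is a hypothetical helper.

```python
PRICE_PER_MILLION_WRITES = 1.25  # USD per million write request units, as quoted above


def daily_write_cost(events_per_day: int, writes_per_event: int) -> float:
    """Daily on-demand write cost, assuming one WRU per write (items <= 1 KB)."""
    return events_per_day * writes_per_event * PRICE_PER_MILLION_WRITES / 1_000_000


for events in (100_000, 10_000_000):
    raw = daily_write_cost(events, writes_per_event=1)   # raw events table only
    full = daily_write_cost(events, writes_per_event=3)  # + dedup + one aggregate write
    overhead = full - raw
    print(
        f"{events:>10,} events/day: raw ${raw:.3f}, full ${full:.3f}, "
        f"overhead ${overhead:.2f}/day (~${overhead * 30:.2f}/month)"
    )
```

If the consumer writes both a per-program and a global aggregate for each event, `writes_per_event` becomes 4 and the overhead grows accordingly.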

Potential Pipeline Drift

Snapshot-based leaderboards can lag under burst load. I added monitoring to detect when processing falls behind and alert before users notice stale data.
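A minimal sketch of the drift check in Python, assuming the inputs come from CloudWatch: SQS publishes `ApproximateAgeOfOldestMessage` and `ApproximateNumberOfMessagesVisible`, while snapshot age would be computed from the latest snapshot's timestamp. `PipelineHealth`, `staleness_alerts`, and the default thresholds are hypothetical; in practice each condition would more likely be its own CloudWatch alarm.

```python
from dataclasses import dataclass


@dataclass
class PipelineHealth:
    oldest_message_age_s: float  # e.g. SQS ApproximateAgeOfOldestMessage on the main queue
    dlq_depth: int               # e.g. ApproximateNumberOfMessagesVisible on the DLQ
    snapshot_age_s: float        # now minus the latest snapshot's generation time


def staleness_alerts(
    h: PipelineHealth,
    max_queue_age_s: float = 300,     # hypothetical threshold
    max_snapshot_age_s: float = 600,  # hypothetical threshold
) -> list:
    """Return the alert conditions that are currently breached."""
    alerts = []
    if h.oldest_message_age_s > max_queue_age_s:
        alerts.append("processing lag: consumers are falling behind the queue")
    if h.dlq_depth > 0:
        alerts.append("dead-letter queue is not empty")
    if h.snapshot_age_s > max_snapshot_age_s:
        alerts.append("leaderboard snapshot is stale")
    return alerts
```

Watching queue age and snapshot age separately distinguishes "consumers are behind" from "the rebuild job is failing", which the original system could not tell apart.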

Demo Simplicity vs. Production Security

The Function URL endpoint is public and unauthenticated for demonstration purposes. In production, it would be replaced with a proper API Gateway setup that includes rate limiting, load balancing, and authentication.

Results After Migration

After migrating to the event-driven architecture:

Reliability

- Ingestion success rate increased to 99.7% (up from ~95%)

- Zero cascading failures during traffic spikes

- Mean time to recovery reduced significantly (from hours to minutes)

Scalability

- Successfully handled burst traffic during company-wide events

- Auto-scaling absorbed traffic spikes without manual intervention

- No more hitting Lambda concurrency limits during peak periods

Operational Efficiency

- Deployment time reduced dramatically (via Terraform automation)

- On-call incidents reduced substantially

- Debug time per incident cut by more than half

Cost

- Infrastructure costs remained stable despite traffic growth

- Eliminated over-provisioning requirements

- Predictable cost scaling based on actual event volume

Developer Experience

- New features can be added without touching core pipeline

- Testing isolated components is significantly easier

- Team velocity increased as failure domains became clearer

Key Lessons Learned as a Consultant

1. Decouple Early, Even If It Feels Over-Engineered

The temptation to keep things simple in early stages is real, but I’ve seen the cost of retrofitting decoupling. Even for MVPs, I now advise clients to consider where traffic spikes or failures could cascade.

2. SQS Is Underrated for Pipeline Resilience

The simple addition of a queue between stages transformed the operational posture. It’s not just about throughput—it’s about controlled failure modes and observable backlog behavior. This is one of my go-to patterns now.

3. Idempotency Is Non-Negotiable in Data Pipelines

At-least-once delivery is easier to work with than exactly-once, but only if you design for idempotency from day one. The deduplication table strategy I implemented proved essential under real-world conditions.

4. Observability Must Be Multi-Dimensional

CloudWatch metrics alone weren’t enough. I needed:

- Queue depth trends (capacity planning)

- Processing lag metrics (SLA compliance)

- Error rates per stage (isolated debugging)

- Cost attribution per component

5. Terraform Enabled Safe Migration

Being able to spin up parallel environments, test thoroughly, and roll back cleanly gave me the confidence to migrate without downtime. Infrastructure as code isn’t optional for modern data systems.

6. Stakeholder Communication About Trade-offs Is Critical

I learned to frame architectural decisions in terms of business trade-offs:

- Leaderboards update every 2 minutes instead of real-time, but the system won’t go down during traffic spikes

- We’re adding a deduplication table, which means slightly higher storage costs, but guarantees data accuracy

This helped stakeholders understand they were getting resilience, not just complexity.

When Should You Use This Architecture?

Based on my consulting experience, this event-driven approach makes sense when:

- You have unpredictable or bursty traffic patterns

  - Marketing campaigns, time-based events, viral content

- You need independent scaling of pipeline stages

  - Different stages have different resource requirements

- You require resilient failure handling

  - Transient failures shouldn’t stop the whole pipeline

- You want operational visibility per component

  - You need to debug issues quickly in production

This might be overkill if:

- Your traffic is completely predictable and low-volume

- You need strict sub-second latency guarantees end-to-end

- Your team isn’t comfortable with eventual consistency

For this client, the trade-off of slightly delayed leaderboard updates (seconds to minutes) was worth the massive gain in reliability and operational simplicity.

Infrastructure as Code

I provisioned all components using Terraform, including:

- DynamoDB tables and streams

- EventBridge Pipes

- SQS queues and dead-letter queues

- Lambda functions and layers

- IAM roles and policies

- CloudWatch alarms and dashboards

Infrastructure as code enabled:

- Reproducible environments across dev/staging/prod

- Controlled change management with version control

- Automated teardown and cost optimization

- Reduced configuration drift and deployment risk

Conclusion

Decoupling data ingestion, processing, and serving paths transformed my client’s system from brittle to resilient. By leveraging AWS managed services and Terraform, I eliminated cascading failures, significantly reduced debug time, and enabled the pipeline to handle traffic spikes without infrastructure rewrites.

The investment in event-driven architecture paid dividends not just in system reliability, but in team velocity and operational confidence.

If you’re dealing with similar challenges—tightly coupled data pipelines, burst traffic patterns, or cascading failures—I’d love to hear about your situation.

What data pipeline challenges are you facing? Drop a comment or connect with me on LinkedIn.

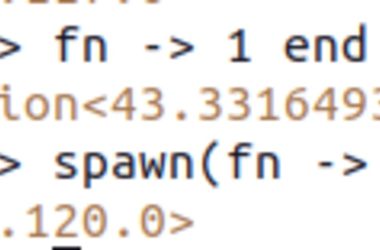

Source Code: GitHub Repository