How to Run MLPerf Llama 2 70B Training on AMD MI325X Without SLURM

This guide covers running the MLPerf Training v5.1 Llama 2 70B LoRA fine-tuning benchmark on a multi-node AMD Instinct MI325X cluster without a SLURM scheduler.

Overview

AMD provides an official MLPerf Training Docker image (rocm/amd-mlperf:llama2_70b_training_5.1) designed primarily for SLURM-managed clusters. However, many environments use simpler SSH-based orchestration. This post demonstrates how to run multi-node distributed training using PyTorch’s rendezvous mechanism.

Hardware Setup

- Cluster: 4× MiTAC MI325X nodes
- GPUs: 8× AMD Instinct MI325X per node (32 total)
- Network: High-speed interconnect for RCCL communication
- Storage: Shared NFS mount at /mnt/shared

Prerequisites

Software Dependencies

| Component | Version | Notes |
|---|---|---|
| ROCm | 6.2+ | Host driver and runtime |
| Docker | 24.0+ | With GPU support configured |
| RCCL | Included in container | ROCm Collective Communications Library |
| PyTorch | 2.4+ (ROCm) | Included in container |
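The minimum versions above can be checked on each host before launching. The sketch below is our own hypothetical helper, not part of AMD's tooling; it compares dotted version strings with GNU `sort -V`, and how you obtain the installed versions is left to the caller.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 is >= version $2.
# Relies on GNU sort's -V (version sort) to order dotted version strings.
version_at_least() {
  local actual=$1 required=$2
  # The lower version sorts first; if that is the required one, the
  # installed version is at least as new.
  [ "$(printf '%s\n%s\n' "$required" "$actual" | sort -V | head -n1)" = "$required" ]
}

# Hypothetical wrapper that prints a status line per component.
check_min_version() {
  local name=$1 actual=$2 required=$3
  if version_at_least "$actual" "$required"; then
    echo "OK   $name $actual (>= $required)"
  else
    echo "FAIL $name $actual (needs >= $required)"
    return 1
  fi
}
```

For example: `check_min_version Docker "$(docker version --format '{{.Server.Version}}')" 24.0`. On many ROCm installs the installed version is recorded in `/opt/rocm/.info/version`, but treat that path as an assumption and confirm it on your distribution.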

Host Setup

- ROCm Installation: Follow the ROCm installation guide for your Linux distribution.
- Docker GPU Access: Ensure Docker can access AMD GPUs:

  ```bash
  docker run --rm --device /dev/dri --device /dev/kfd rocm/pytorch:latest rocm-smi
  ```

- Multi-Node Networking:
  - Passwordless SSH between all nodes
  - High-speed network interface (InfiniBand/RoCE recommended)
  - Shared filesystem accessible from all nodes
- Pull the MLPerf container on all nodes:

  ```bash
  docker pull rocm/amd-mlperf:llama2_70b_training_5.1
  ```
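Before launching, it helps to confirm the networking and image prerequisites from the launch host in one pass. This is a hypothetical pre-flight sketch: the node names, the `/mnt/shared` path and the image tag come from this setup and should be adjusted. `BatchMode=yes` makes `ssh` fail instead of prompting for a password, so a node without passwordless SSH shows up as a failure.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check, run from the launch host.
# Adjust IMAGE and SHARED_DIR to your environment.
IMAGE=${IMAGE:-rocm/amd-mlperf:llama2_70b_training_5.1}
SHARED_DIR=${SHARED_DIR:-/mnt/shared}

# Succeeds only if passwordless SSH works (BatchMode forbids prompts), the
# shared filesystem is visible, and the MLPerf image is already pulled.
check_node() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" \
    "test -d '$SHARED_DIR' && docker image inspect '$IMAGE' >/dev/null 2>&1"
}

# Check every node and report all failures instead of stopping at the first.
preflight() {
  local node failed=0
  for node in "$@"; do
    if check_node "$node"; then
      echo "ok   $node"
    else
      echo "FAIL $node"
      failed=1
    fi
  done
  return "$failed"
}
```

Run it as `preflight node-0 node-1 node-2 node-3`; a `FAIL` line means SSH, the shared mount, or the pulled image is missing on that node.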

Data Preparation

The Llama 2 70B model and the GovReport dataset need about 270 GB of storage. Downloading them requires a Hugging Face token from an account that has accepted the Llama 2 license:

```bash
export HF_TOKEN=your_token_here
./finetune_llama.sh prepare
```
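Because a download that fails partway through is costly, a quick check of the token and free space can run first. A minimal sketch with hypothetical helper names; it treats the ~270 GB figure as GiB, which leaves a small margin:

```bash
#!/usr/bin/env bash
# Hypothetical checks to run before the data preparation step.

# Fail with a message if HF_TOKEN is unset or empty.
require_hf_token() {
  if [ -z "${HF_TOKEN:-}" ]; then
    echo "HF_TOKEN is not set; export a token for an account that accepted the Llama 2 license" >&2
    return 1
  fi
}

# Succeed if the filesystem holding $1 has at least $2 GiB available.
# df -P gives one POSIX-format line per filesystem; -k reports 1 KiB blocks.
has_free_space() {
  local path=$1 required_gib=$2 avail_kib
  avail_kib=$(df -Pk "$path" | awk 'NR == 2 { print $4 }')
  [ "$avail_kib" -ge $(( required_gib * 1024 * 1024 )) ]
}
```

For example: `require_hf_token && has_free_space /mnt/shared 270 && ./finetune_llama.sh prepare`.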

Two Approaches for Multi-Node Training

AMD’s container supports two launch methods:

1. SLURM-Based (AMD Default)

```bash
# Requires SLURM scheduler
sbatch run_with_docker_slurm.sh
```

2. Manual Multi-Node with Rendezvous

For non-SLURM environments, PyTorch’s torchrun supports a rendezvous backend that handles rank assignment automatically:

```bash
torchrun \
  --nnodes=4 \
  --nproc_per_node=8 \
  --rdzv_backend=c10d \
  --rdzv_endpoint="$MASTER_IP:29500" \
  --rdzv_id=mlperf_run \
  train.py
```

This command runs identically on all nodes: the c10d backend coordinates rank assignment, so no per-node `--node_rank` argument is needed.
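To make "rank assignment" concrete: in a homogeneous job, torchrun gives each worker a global rank of node rank × processes per node + local rank, and exports it to the worker as `RANK` alongside `LOCAL_RANK`, `GROUP_RANK` and `WORLD_SIZE`. With the c10d backend the node rank comes from the rendezvous rather than the command line, so it should not be assumed to follow hostnames or launch order. The functions below only illustrate that arithmetic; they are not part of torchrun.

```bash
#!/usr/bin/env bash
# Illustrative arithmetic only: how a homogeneous torchrun job maps
# (node rank, local rank) to a global rank. torchrun computes this itself
# and exports it to each worker as RANK.
global_rank() {
  local node_rank=$1 local_rank=$2 nproc_per_node=$3
  echo $(( node_rank * nproc_per_node + local_rank ))
}

world_size() {
  local nnodes=$1 nproc_per_node=$2
  echo $(( nnodes * nproc_per_node ))
}

# Print the range of global ranks owned by each node.
print_rank_layout() {
  local nnodes=$1 nproc=$2 node first last
  for (( node = 0; node < nnodes; node++ )); do
    first=$(global_rank "$node" 0 "$nproc")
    last=$(global_rank "$node" $(( nproc - 1 )) "$nproc")
    echo "node rank $node: global ranks $first-$last"
  done
}
```

`print_rank_layout 4 8` shows node rank 0 owning global ranks 0-7 through node rank 3 owning 24-31, for a world size of 32.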

Implementation

Our approach uses SSH to launch training on each node, passing the distributed configuration via environment variables:

Container Launch Pattern

Each node runs:

```bash
# Start container with data mounts
docker run --rm --init --detach \
  --net=host --ipc=host \
  --device /dev/dri --device /dev/kfd \
  --name mlperf_llama2sft \
  -v "$DATADIR/data:/data" \
  -v "$DATADIR/model:/ckpt" \
  -v "$RESULTS:/logs" \
  -v "$CODE_DIR:/workspace/code" \
  rocm/amd-mlperf:llama2_70b_training_5.1 sleep infinity
```

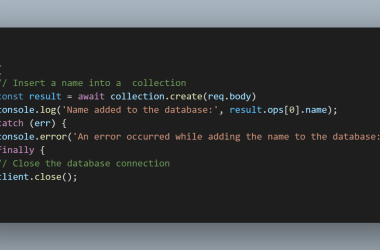

```bash
# Execute training with distributed config
docker exec \
  -e MASTER_ADDR="$MASTER_IP" \
  -e MASTER_PORT=29500 \
  -e SLURM_NNODES="$NUM_NODES" \
  -e SLURM_NODEID="$NODE_RANK" \
  -e NCCL_SOCKET_IFNAME="$NET_IF" \
  mlperf_llama2sft \
  bash -c 'cd /workspace/code && source config_MI325X_4x8x1.sh && bash ./run_and_time_slurm.sh'
```
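The orchestration loop in the next section calls a per-node `launch_training.sh` that is not reproduced in this post. One hypothetical way to write it is to wrap the two commands above in a function that checks its inputs first and supports a dry run; the function name, the `DRY_RUN` switch and the list of required variables are our assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical per-node launcher (a sketch of launch_training.sh, not the
# author's script). Usage: launch_node NODE_RANK NUM_NODES
# Expects MASTER_IP, NET_IF, DATADIR, RESULTS and CODE_DIR in the environment.
# With DRY_RUN=1 the docker commands are printed instead of executed.
IMAGE=${IMAGE:-rocm/amd-mlperf:llama2_70b_training_5.1}
CONTAINER=${CONTAINER:-mlperf_llama2sft}

# Run a command, or print it shell-quoted when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%q ' "$@"
    echo
  else
    "$@"
  fi
}

launch_node() {
  local node_rank=$1 num_nodes=$2 var

  # Refuse to start if any required variable is missing or empty.
  for var in MASTER_IP NET_IF DATADIR RESULTS CODE_DIR; do
    if [ -z "${!var:-}" ]; then
      echo "launch_node: $var is not set" >&2
      return 1
    fi
  done

  # Long-lived container with the data, model, log and code mounts.
  run docker run --rm --init --detach \
    --net=host --ipc=host \
    --device /dev/dri --device /dev/kfd \
    --name "$CONTAINER" \
    -v "$DATADIR/data:/data" \
    -v "$DATADIR/model:/ckpt" \
    -v "$RESULTS:/logs" \
    -v "$CODE_DIR:/workspace/code" \
    "$IMAGE" sleep infinity || return

  # Start training; the SLURM_* variables stand in for a real SLURM allocation.
  run docker exec \
    -e MASTER_ADDR="$MASTER_IP" \
    -e MASTER_PORT=29500 \
    -e SLURM_NNODES="$num_nodes" \
    -e SLURM_NODEID="$node_rank" \
    -e NCCL_SOCKET_IFNAME="$NET_IF" \
    "$CONTAINER" \
    bash -c 'cd /workspace/code && source config_MI325X_4x8x1.sh && bash ./run_and_time_slurm.sh'
}
```

Because the container is started with `sleep infinity`, it keeps running after training finishes; `docker stop mlperf_llama2sft` stops it, and `--rm` then removes it.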

Orchestration Script

The main script SSHs to each node in parallel:

```bash
for node_idx in 0 1 2 3; do
  ssh "node-$node_idx" "launch_training.sh $node_idx $NUM_NODES" &
done
wait
```
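A bare `wait` with no arguments always returns 0, so the loop above will not notice if one node's launch fails. A sketch that records each background PID and waits on them individually, still assuming the `node-N` hostnames and a `launch_training.sh` on each node's PATH:

```bash
#!/usr/bin/env bash
# Launch one node's training over SSH (hostnames and helper are assumptions).
launch_on_node() {
  local node_idx=$1 num_nodes=$2
  ssh "node-$node_idx" "launch_training.sh $node_idx $num_nodes"
}

# Start every node in parallel, then wait on each PID so that a failure on
# any node is reported and reflected in the return status.
run_all_nodes() {
  local num_nodes=$1 idx rc=0
  local -a pids=()
  for (( idx = 0; idx < num_nodes; idx++ )); do
    launch_on_node "$idx" "$num_nodes" &
    pids+=("$!")
  done
  for idx in "${!pids[@]}"; do
    if ! wait "${pids[idx]}"; then
      echo "node $idx failed" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Calling `run_all_nodes 4` replaces the loop; a non-zero status means at least one node's launch failed, and the failing node indices are printed to stderr.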

Key Configuration

The config_MI325X_4x8x1.sh sets critical parameters:

```bash
export DGXNNODES=4
export DGXNGPU=8
export FP8=True
export LR=0.0004
export MBS=1 # micro batch size
export MAX_STEPS=1024
```
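The `4x8x1` suffix appears to encode nodes × GPUs per node × micro batch size, and with MBS=1 the batch sizes reported in the results (8 and 32) equal nodes × GPUs × MBS. Assuming pure data parallelism with no gradient accumulation (our assumption; the config excerpt does not confirm it), a hypothetical sanity check before launching could be:

```bash
#!/usr/bin/env bash
# Hypothetical pre-launch check: does the config match the node count we are
# about to launch, and what global batch size does it imply?
# Assumes global batch = DGXNNODES x DGXNGPU x MBS (data parallelism only,
# no gradient accumulation).
check_config() {
  local config=$1 expected_nodes=$2
  (
    # Source in a subshell so the config's exports do not leak to the caller.
    # shellcheck disable=SC1090
    source "$config" || exit 1
    # Abort the subshell if any required variable is missing.
    : "${DGXNNODES:?} ${DGXNGPU:?} ${MBS:?}"
    if [ "$DGXNNODES" -ne "$expected_nodes" ]; then
      echo "$config targets $DGXNNODES node(s), not $expected_nodes" >&2
      exit 1
    fi
    echo "global batch size: $(( DGXNNODES * DGXNGPU * MBS ))"
  )
}
```

With the values above, `check_config config_MI325X_4x8x1.sh 4` prints `global batch size: 32`.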

Results

Single Node (8 GPUs)

| Metric | Value |
|---|---|
| Throughput | 2.79 samples/sec |
| Time to Converge | 20.57 minutes |
| Final Loss | 0.921 (target: ≤0.925) |

Four Nodes (32 GPUs)

| Metric | Value |
|---|---|
| Throughput | 11.15 samples/sec |
| Time to Converge | 12.40 minutes |
| Final Loss | 0.924 (target: ≤0.925) |

Scaling Analysis

| Metric | 1 Node | 4 Nodes | Scaling Factor |
|---|---|---|---|
| GPUs | 8 | 32 | 4× |
| Global Batch Size | 8 | 32 | 4× |
| Throughput (samples/sec) | 2.79 | 11.15 | 4.0× |

Throughput scales by 4.0× for 4× the GPUs (11.15 vs. 2.79 samples/sec). This near-linear scaling indicates that the network interconnect is not a bottleneck at this scale.
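The scaling figures can be reproduced from the raw measurements. Scaling efficiency here means measured speedup divided by ideal speedup (the node count). The small helper below is hypothetical and uses `awk` for floating-point math; it also computes the time-to-converge speedup, which lags throughput as discussed under Key Takeaways.

```bash
#!/usr/bin/env bash
# Compute scaling metrics from measured results.
# Args: 1-node throughput, N-node throughput, N, 1-node TTC, N-node TTC.
scaling_report() {
  awk -v t1="$1" -v tn="$2" -v n="$3" -v ttc1="$4" -v ttcn="$5" 'BEGIN {
    speedup = tn / t1
    printf "throughput speedup: %.2fx\n", speedup
    printf "scaling efficiency: %.1f%%\n", 100 * speedup / n
    printf "time-to-converge speedup: %.2fx\n", ttc1 / ttcn
  }'
}
```

With the numbers from this post, `scaling_report 2.79 11.15 4 20.57 12.40` reports a throughput speedup of 4.00x, a scaling efficiency of 99.9% and a time-to-converge speedup of 1.66x.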

Comparison with Official Results

Our single-node time to converge (20.57 minutes) is in line with AMD’s official MLPerf Training v5.1 submission for MI325X (about 21 minutes), which suggests the configuration is correct.

Key Takeaways

- Container Design: AMD’s container expects the training scripts at `/workspace/code`; mount custom configs there rather than extracting files from the image.
- Network Interface: Set `NCCL_SOCKET_IFNAME` to your high-speed network interface for optimal RCCL performance (RCCL reads the same `NCCL_*` environment variables as NCCL).
- SLURM Variables: The container’s `run_and_time_slurm.sh` reads `SLURM_NNODES` and `SLURM_NODEID`; you can set these manually in non-SLURM environments.
- Scaling: Expect near-linear throughput scaling on properly configured clusters. Time to convergence may scale differently because batch size affects convergence dynamics: in our runs, 4× the GPUs reduced it from 20.57 to 12.40 minutes.
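To pick a value for `NCCL_SOCKET_IFNAME`, one option is to ask the kernel which interface routes to a peer node. This hypothetical helper parses the output of iproute2's `ip route get`; it assumes the high-speed network carries the route to the address you pass, so use an address on that network if management and training traffic are separate. On a node querying its own address, the route resolves to the loopback device `lo`, so query a peer's address instead.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report the interface the kernel would use to reach a
# given address, as a candidate value for NCCL_SOCKET_IFNAME.

# Print the word after "dev" in `ip route get` output (first match only).
parse_route_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Query the routing table (requires iproute2's `ip` command).
iface_for_ip() {
  ip route get "$1" | parse_route_dev
}
```

For example, `export NET_IF=$(iface_for_ip "$PEER_IP")`, where `PEER_IP` is a hypothetical variable holding another node's address on the training network.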


Full Script

The complete finetune_llama.sh script supports:

- Single and multi-node runs

- Configurable NEXP for MLPerf-compliant submissions

- Automatic config selection based on node count

```bash
./finetune_llama.sh run 4      # 4-node, single run
./finetune_llama.sh run 4 10   # 4-node, 10 runs (MLPerf submission)
```
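The full script is not published here, but the argument handling described above might look roughly like the following hypothetical sketch. Only `config_MI325X_4x8x1.sh` is named in this post; deriving other file names from the node count assumes the same naming pattern holds.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of "run NODES [NEXP]" handling; not the actual
# finetune_llama.sh. Config names other than config_MI325X_4x8x1.sh are
# assumed to follow the same NODESx8x1 pattern.
select_config() {
  echo "config_MI325X_${1}x8x1.sh"
}

run_benchmark() {
  local nodes=${1:-} nexp=${2:-1} config i
  # Both arguments must be positive integers.
  if ! [[ $nodes =~ ^[1-9][0-9]*$ && $nexp =~ ^[1-9][0-9]*$ ]]; then
    echo "usage: run NODES [NEXP]" >&2
    return 1
  fi
  config=$(select_config "$nodes")
  for (( i = 1; i <= nexp; i++ )); do
    echo "experiment $i/$nexp: $nodes node(s), $config"
    # Placeholder: launch one complete training run with "$config" here.
  done
}
```

`run_benchmark 4 10` would iterate ten times over `config_MI325X_4x8x1.sh`; a real implementation would replace the placeholder comment with the launch steps shown earlier.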

Interested in the full script? Reach out via LinkedIn and I’ll be happy to share.