In this article, we’ll explore how to build a local legal risk analysis system that doesn’t “hallucinate” text, but instead relies on hard links to the legal framework and case law.

Problem: Why are standard LLMs useless in law?

Standard models (even GPT-4) are prone to hallucinating article numbers and interpretations. In legal matters, a single wrong digit in an article number can lose the case. Our goal is to turn the LLM from an “author” into a data manager.

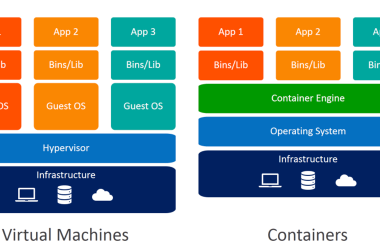

“Killer 2.0” System Architecture

- Data Layer (The Vault)

We don’t rely on the model’s built-in knowledge. Instead, we build a vector database (Vector DB).

Stack: ChromaDB or FAISS.

Content: Local PDF/JSON exports from up-to-date legal databases (Consultant+, Garant).

Process: Texts are split into chunks, and each chunk is converted into an embedding vector. Every chunk also receives a unique local ID, which serves as its link back to the source.
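A minimal sketch of the chunking step, using only the Python standard library. The chunk size, the overlap, and the `<source>#<index>` ID format are illustrative assumptions, not a scheme used by any particular database; in a real pipeline LlamaIndex or ChromaDB would handle splitting and embedding.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    chunk_id: str  # hypothetical local ID scheme: "<source>#<index>"
    source: str
    text: str


def chunk_text(source: str, text: str, size: int = 500, overlap: int = 50) -> list[Chunk]:
    """Split text into overlapping character windows and give each a stable local ID."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    step = size - overlap
    chunks = []
    for index, start in enumerate(range(0, len(text), step)):
        piece = text[start:start + size]
        chunks.append(Chunk(chunk_id=f"{source}#{index}", source=source, text=piece))
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


chunks = chunk_text("admin_code.txt", "A" * 1200)
print([c.chunk_id for c in chunks])  # ['admin_code.txt#0', 'admin_code.txt#1', 'admin_code.txt#2']
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, so retrieval does not miss it.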

- Retrieval Layer

When you submit a document for review, the system doesn’t ask the model “What’s wrong here?”

Instead, it compares the sentence vectors from your document with the vectors in the law database.

It then returns the top 5 most relevant articles and precedents.
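The retrieval step boils down to a nearest-neighbour search. The sketch below does it by brute force with cosine similarity in pure Python; ChromaDB and FAISS do the same job with optimized indexes. The toy 3-dimensional vectors and the chunk IDs are made up for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 5) -> list[tuple[str, float]]:
    """Return the k chunk IDs whose vectors are most similar to the query, best first."""
    scored = [(chunk_id, cosine(query, vec)) for chunk_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy "embeddings"; real ones come from an embedding model.
law_index = {
    "art_1": [1.0, 0.0, 0.0],
    "art_2": [0.0, 1.0, 0.0],
    "art_3": [0.7, 0.7, 0.0],
}
print([cid for cid, _ in top_k([1.0, 0.1, 0.0], law_index, k=2)])  # ['art_1', 'art_3']
```

Only the returned IDs and their texts are passed to the model, which is what keeps its answers tied to the database.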

- Logic Layer (The Dispatcher)

Here we use a lightweight model (e.g., Llama-3.2-3B or Qwen2-1.5B), quantized to 4 bits so that it fits into 3 GB of VRAM.
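A quick back-of-the-envelope check of why 4-bit quantization matters: weight memory is roughly the parameter count times bits per weight, divided by 8. This is a sketch that ignores the KV cache, activations, and runtime overhead, which consume the rest of the 3 GB budget; the parameter counts are rounded.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


print(weight_memory_gb(3e9, 4))    # 1.5  (a ~3B model at 4-bit)
print(weight_memory_gb(3e9, 16))   # 6.0  (the same model at fp16)
print(weight_memory_gb(1.5e9, 4))  # 0.75 (a ~1.5B model at 4-bit)
```

At fp16 a 3B model would not fit in 3 GB at all; at 4 bits its weights take about half the budget.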

The model’s task is not to write text but to populate a JSON table.

Prompt: “Using only the provided law fragments [Context], fill out the table. If there is no direct violation, leave the field blank.”
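A minimal sketch of how such a prompt could be assembled, with each retrieved fragment prefixed by its local ID so the model can cite it. `build_prompt` and the bracketed ID format are hypothetical; the instruction text is the prompt quoted above, and the JSON field names are the ones used for the output table in Step 2.

```python
import json


def build_prompt(fragments: dict[str, str], clause: str) -> str:
    """Assemble a grounded prompt: ID-tagged law fragments, then the clause under review."""
    context = "\n".join(f"[{chunk_id}] {text}" for chunk_id, text in fragments.items())
    schema = {"violation_source": "", "law_ref": "", "penalty": "", "precedent": ""}
    return (
        "Using only the provided law fragments [Context], fill out the table. "
        "If there is no direct violation, leave the field blank.\n"
        "Cite fragments only by the IDs shown in square brackets.\n\n"
        f"[Context]\n{context}\n\n"
        f"[Clause]\n{clause}\n\n"
        f"Answer with a JSON list of objects shaped like: {json.dumps(schema)}"
    )
```

Putting the IDs directly into the context gives the model something concrete to copy into `law_ref` instead of recalling an article number from memory.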

Mini Assembly Instructions

Step 1: Indexing (Python)

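Use LlamaIndex to index your local folders so the model can query them. Note that LlamaIndex uses OpenAI models for embeddings by default; to keep the pipeline fully local, set `Settings.embed_model` to a local embedding model before building the index.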

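```python
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

# Load all files from the local law folder into Document objects
documents = SimpleDirectoryReader("./laws_russia").load_data()

# Chunk the documents, embed each chunk, and build a vector index over them
index = VectorStoreIndex.from_documents(documents)
```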

Step 2: Generating Tabular Output

Configure the output parser so the model produces structured data with links:

| Field | Description |
|---|---|
| `violation_source` | Quote from your text |
| `law_ref` | Link to the article ID in the local database |
| `penalty` | Type of liability (Administrative Code/Criminal Code) |
| `precedent` | Link to the court case number |
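Whatever output parser you use, the key safeguard is to check every `law_ref` against the IDs that were actually retrieved, so a hallucinated article number is rejected instead of reaching the report. A minimal standard-library sketch; `RiskRow` and `parse_rows` are hypothetical names, and the fields mirror the ones listed above.

```python
import json
from dataclasses import dataclass


@dataclass
class RiskRow:
    violation_source: str
    law_ref: str
    penalty: str
    precedent: str


def parse_rows(raw_json: str, allowed_ids: set[str]) -> list[RiskRow]:
    """Parse the model's JSON output and reject any law_ref not present in the retrieved context."""
    # Missing or unexpected keys make the dataclass constructor raise TypeError.
    rows = [RiskRow(**item) for item in json.loads(raw_json)]
    for row in rows:
        # A blank law_ref is allowed (no direct violation); anything else must be a known chunk ID.
        if row.law_ref and row.law_ref not in allowed_ids:
            raise ValueError(f"law_ref {row.law_ref!r} is not in the retrieved context")
    return rows
```

The `precedent` field can be checked the same way against the IDs of retrieved court decisions.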

Step 3: Claim Generation

The final module takes the data from the table and inserts it into prepared legal templates. This eliminates AI “creativity” from official documents.
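Because this step is plain string substitution, nothing from the model reaches the document except the table fields themselves. A minimal sketch using Python's `string.Template`; the template wording is a hypothetical placeholder, not an actual legal form.

```python
from string import Template

# Hypothetical claim template; real templates would be drafted by a lawyer.
CLAIM_TEMPLATE = Template(
    "The clause \"$violation_source\" violates $law_ref "
    "and may entail liability under the $penalty. See also case $precedent."
)


def render_claim(row: dict[str, str]) -> str:
    """Fill the template strictly from table fields; a missing field raises KeyError."""
    return CLAIM_TEMPLATE.substitute(row)
```

Using `substitute` rather than `safe_substitute` is deliberate: an incomplete row fails loudly instead of producing a claim with an unfilled placeholder.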

Why does this work?

Locality: Your documents and sensitive data never leave your PC.

Verifiability: Each table row is a clickable link to the original source.

Efficiency: The system runs on a consumer graphics card and uses minimal resources, because the model generates only short, structured responses.