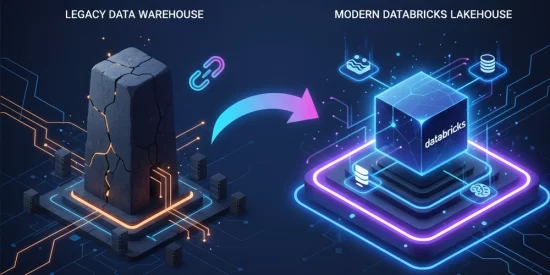

Let’s talk about the dinosaur in your server room. It’s not a fossil, but it might as well be. It’s your legacy data warehouse—that expensive, rigid, on-premises system from Teradata, Netezza, or Oracle that has been the backbone of your business intelligence for the last two decades. For years, it has been a reliable workhorse, but in the age of AI and big data, it has become a major obstacle to innovation.

These legacy systems are notoriously expensive to maintain, struggle to handle the variety and volume of modern data (like video, text, and IoT streams), and lock your data in a proprietary format that is inaccessible to modern machine learning tools. Migrating off these platforms is no longer a question of if, but how. The challenge is that these projects are complex and high-risk. A successful migration doesn’t happen by accident; it requires a proven, methodical approach. This playbook outlines a 4-phase strategy to de-risk the process and ensure you can finally decommission the dinosaur.

Why Migrate? The Compelling Case for the Databricks Lakehouse

Before diving into the “how,” it’s critical to be clear on the “why.” The move from a traditional data warehouse to the Databricks Data Intelligence Platform (built on the lakehouse architecture) is not just a technology swap; it’s a fundamental upgrade in capability that addresses the core limitations of the legacy world.

The 4-Phase Migration Playbook: A Proven Approach

A successful migration is a carefully orchestrated project, not a rushed “lift-and-shift.” This 4-phase playbook provides a structured path to success.

Phase 1: Assess & Discover

This is the critical reconnaissance phase. You cannot migrate what you do not understand.

What to do: The first step is to create a complete inventory of your existing data warehouse environment. This includes all data schemas, tables, views, user roles, and, most importantly, all the ETL (Extract, Transform, Load) pipelines and stored procedures that feed data into and out of the warehouse. Automated discovery tools can significantly accelerate this process.

The Goal: To produce a comprehensive map of your current state and to prioritize workloads for migration. You should identify a low-risk, high-value data mart (e.g., the marketing analytics workload) to serve as the initial pilot project.
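
To make the prioritization step concrete, here is a minimal sketch of how an inventory could be turned into a ranked list of pilot candidates. Everything in it is an assumption for illustration: the `Workload` fields, the risk weights, and the scoring formula are hypothetical, and a real assessment would draw these numbers from your discovery tooling and your stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """One candidate data mart from the Phase 1 inventory (hypothetical fields)."""
    name: str
    tables: int
    etl_pipelines: int
    stored_procedures: int
    business_value: int  # 1 (low) to 5 (high), assumed to come from stakeholders

    @property
    def risk(self) -> int:
        # Assumed proxy for migration risk: procedural logic is weighted more
        # heavily than plain tables because it must be rewritten, not copied.
        return self.tables + 2 * self.etl_pipelines + 3 * self.stored_procedures

    @property
    def pilot_score(self) -> float:
        # Higher value and lower risk both push a workload up the list.
        return self.business_value / (1 + self.risk)


def rank_pilot_candidates(workloads):
    """Return workloads ordered from best to worst pilot candidate."""
    return sorted(workloads, key=lambda w: w.pilot_score, reverse=True)


# Illustrative inventory; the names and counts are made up.
inventory = [
    Workload("finance_reporting", tables=80, etl_pipelines=30,
             stored_procedures=25, business_value=5),
    Workload("marketing_analytics", tables=12, etl_pipelines=4,
             stored_procedures=1, business_value=4),
    Workload("hr_archive", tables=5, etl_pipelines=1,
             stored_procedures=0, business_value=1),
]

for workload in rank_pilot_candidates(inventory):
    print(f"{workload.name}: risk={workload.risk}, score={workload.pilot_score:.3f}")
```

With these made-up numbers, the small but valuable marketing workload ranks first, which matches the low-risk, high-value profile described above. The weights are the part most worth debating with your own team.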

Phase 2: Plan & Architect

With a clear understanding of your current state, you can now design the future state.

What to do: This involves architecting your new Databricks environment. You will design the new data ingestion pipelines, decide on your Medallion Architecture (Bronze, Silver, Gold layers), and plan your security and governance framework using tools like Unity Catalog. A key task in this phase is creating a detailed plan for ETL refactoring—deciding how you will rewrite the logic from your old, proprietary ETL tools into modern, scalable Spark jobs on Databricks.

The Goal: To have a complete architectural blueprint and a detailed, phased migration plan before you move a single byte of data.
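
The Bronze, Silver, and Gold layers are easier to reason about with a small example. The sketch below uses plain Python lists and dictionaries so it runs anywhere; on Databricks the same flow would typically be expressed as Spark jobs writing to tables in each layer. The function names, the `order_id`/`region`/`amount` columns, and the cleaning rules are all assumptions chosen for illustration.

```python
from collections import defaultdict


def to_bronze(raw_rows):
    """Bronze: land records as-is, adding only ingestion metadata."""
    return [{**row, "_source": "legacy_dw"} for row in raw_rows]


def to_silver(bronze_rows):
    """Silver: drop incomplete records, normalize types, deduplicate on order_id."""
    cleaned = {}
    for row in bronze_rows:
        if row.get("order_id") is None or row.get("amount") is None:
            continue  # assumed rule: records without a key or amount are rejected
        cleaned[row["order_id"]] = {
            "order_id": row["order_id"],
            "region": row["region"].strip().upper(),
            "amount": float(row["amount"]),
        }
    return list(cleaned.values())


def to_gold(silver_rows):
    """Gold: business-level aggregate, here total order amount per region."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


# Illustrative extract with a duplicate, messy casing, and a record missing its key.
raw_extract = [
    {"order_id": 1, "region": " emea ", "amount": "100.50"},
    {"order_id": 1, "region": " emea ", "amount": "100.50"},
    {"order_id": 2, "region": "amer", "amount": "20"},
    {"order_id": None, "region": "amer", "amount": "5"},
]

gold = to_gold(to_silver(to_bronze(raw_extract)))
print(gold)
```

Keeping each layer as its own function mirrors the design decision made in this phase: raw data is preserved untouched, cleaning rules live in one place, and reporting logic only ever reads cleaned data.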

Phase 3: Execute & Validate

This is the core migration phase where the plan is put into action.

What to do: The migration is typically executed in waves, starting with the pilot workload identified in Phase 1. This involves two parallel streams of work:

1) Data Migration: Physically moving the historical data from the on-premises warehouse to your cloud storage.
2) ETL Migration: Executing the plan to refactor the old data pipelines to run on Databricks.

Once the data and pipelines are moved, a rigorous data validation process is required to ensure that the new system produces exactly the same results as the old one.

The Goal: To successfully migrate each workload, validate its correctness, and switch the end-users over to the new Databricks platform.
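
One common way to approach the validation step is to compare row counts and an order-independent checksum for each table on both systems. The sketch below is a minimal illustration under stated assumptions: rows are already extracted as Python dictionaries, the helper names `table_fingerprint` and `validate_table` are hypothetical, and values are compared by their `repr`, so a type change such as `10` versus `10.0` is reported as a mismatch. At warehouse scale you would normally push this aggregation down into each engine rather than pull rows out.

```python
import hashlib

MODULUS = 2 ** 256  # keeps the running checksum at a fixed size


def table_fingerprint(rows, columns):
    """Return (row_count, checksum) for a table, independent of row order."""
    count = 0
    checksum = 0
    for row in rows:
        # Canonical text form of the row, using only the compared columns.
        canonical = "|".join(repr(row[col]) for col in columns)
        row_hash = hashlib.sha256(canonical.encode("utf-8")).digest()
        # Summing hashes (rather than XOR) means duplicate rows do not cancel out.
        checksum = (checksum + int.from_bytes(row_hash, "big")) % MODULUS
        count += 1
    return count, checksum


def validate_table(legacy_rows, migrated_rows, columns):
    """Compare the legacy and migrated copies of one table."""
    legacy_count, legacy_sum = table_fingerprint(legacy_rows, columns)
    new_count, new_sum = table_fingerprint(migrated_rows, columns)
    return {
        "row_count_match": legacy_count == new_count,
        "checksum_match": legacy_sum == new_sum,
    }


# Illustrative data: same rows in a different order, then one drifted value.
legacy = [{"id": 1, "total": 10.0}, {"id": 2, "total": 20.5}]
migrated_ok = [{"id": 2, "total": 20.5}, {"id": 1, "total": 10.0}]
migrated_bad = [{"id": 1, "total": 10.0}, {"id": 2, "total": 20.6}]

print(validate_table(legacy, migrated_ok, ["id", "total"]))
print(validate_table(legacy, migrated_bad, ["id", "total"]))
```

A check like this only tells you that something differs, not where. In practice it works best as a fast gate per table, followed by a row-level diff on any table that fails.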

Phase 4: Optimize & Govern

The journey is not over once the migration is complete. The final phase is about maximizing the value of your new platform.

What to do: Now that your data is on a modern, AI-ready platform, you can begin to explore new use cases, such as building machine learning models. This phase also involves implementing the FinOps best practices discussed in our previous blog post to ensure your Databricks environment stays cost-optimized and continuously governed.

The Goal: To transition from a migration project to a state of continuous innovation and optimization on your new data intelligence platform.

How Hexaview De-Risks Your Legacy Warehouse Migration

At Hexaview, we are specialists in executing complex, large-scale data warehouse modernization projects. We have a proven, battle-tested methodology that aligns perfectly with this 4-phase playbook. Our team of certified Databricks and cloud architects handles every aspect of the migration, from the initial automated discovery and strategic planning to the complex ETL refactoring and rigorous data validation. We de-risk the entire process, ensuring a seamless, cost-effective, and successful migration that allows you to finally decommission your legacy systems and unlock the full potential of your data for AI and advanced analytics.