In today’s dynamic digital business environment, AI and large language models (LLMs) are transforming how enterprises should operate their daily processes. These tech innovations have become integral to maintaining a competitive edge, from improving CX through intelligent chatbots to automating decision-making. According to the PwC report, AI will contribute up to $15.7 trillion to the global economy by 2030. It is becoming a transformative force across various sectors.

However, as more and more businesses rely on AI and LLM solutions, the complexity of maintaining a security framework is also increasing. These technologies deal with huge amounts of sensitive information, which makes them the prime target of cybercriminals. So the question is, “How can enterprises maintain the security and integrity of their AI-driven operations?” The answer is security Testing. It will allow businesses to proactively identify vulnerabilities and ensure their AI systems operate securely and on a robust framework.

Why are AI and LLM Important for Enterprises?

Before understanding why businesses should invest in AI and LLMs security testing, let us understand their role in enterprise operations. Looking at the current state of the global market, these technologies are trending. Industry-leading experts believe that AI will change the way businesses should operate in the upcoming years. Transforming business operations, improving efficiency, driving innovation, and improving CX are just a few examples among many that display how AI is driving the digital business era. AI solutions not only improve decision-making but also streamline business processes and handle complex data-driven tasks.

Enterprises must inject quality and huge data sets into AI and LLM solutions to generate insights. This will allow them to work on the latest market trends and improve their services and workflows. Let’s take an example of GPT-4, an LLM-based model that transforms how businesses should handle natural language deciphering and creation processes. It is enabling enterprises to upscale the content creation, data analytics, and customer service process by making them appear more human-like. Doing so reduces human workload by automating tasks like email writing, report writing, and documentation. This allows employees to focus on more strategic tasks that add value to business operations. Thus, by integrating these technologies, businesses can achieve higher operational efficiency and customer satisfaction.

Risks Associated with AI and LLM

Although AI and LLM benefit enterprises significantly, they also pose some risks that businesses should effectively manage. One must know that these technologies deal with vast and diverse datasets. So, the chances of data breaches and information misuse will probably be high. Due to this, it would be a serious security concern for businesses. Let’s take a close look at some of the risks involved with AI and LLM implementation in brief:

Data Privacy and Security:

Businesses often have to inject AI and LLM systems with sensitive data. This makes them the prime targets for cyberattacks. They should understand that unauthorized access to these systems can lead to data breaches, which, in turn, will expose their critical business information to cybercriminals. They can misuse that information for their own personal gains, costing millions of dollars to the affected businesses.

Model Manipulation:

Adversarial attacks, such as evasion, data poisoning, Byzantine attacks, and model extraction, can manipulate AI models, causing them to make incorrect predictions or decisions. This can severely affect business, especially finance and healthcare.

Bias and Fairness:

Another significant risk is that AI models can generate biases in the training data, leading to unfair outputs. If businesses train their AI models with biased data sets, it can affect the fairness of automated decisions, negatively impact customer trust, and might lead to legal consequences.

Compliance Issues:

This risk poses a major challenge in AI and LLM implementation. Enterprises must ensure their AI systems comply with data protection laws like GDPR and CCPA. Non-compliance can result in significant fines and reputational damage.

OWASP Top-10 for AI and LLM

The OWASP Top-10 for AI and LLM provides a comprehensive list of AI systems’ most critical security vulnerabilities.

Key vulnerabilities include:

• [OWASP – LLM01] Prompt Injection vulnerability occurs when an attacker manipulates a large language model (LLM) through crafted inputs, causing the LLM to unknowingly execute the attacker’s intentions.

• [OWASP – LLM02] Insecure Output Handling refers specifically to insufficient validation, sanitization, and handling of the outputs generated by large language models before they are passed downstream to other components and systems.

• [OWASP – LLM03] Training Data Poisoning refers to the manipulation of pre-training data or data involved within the fine-tuning or embedding processes to introduce vulnerabilities (which all have unique and sometimes shared attack vectors), backdoors, or biases that could compromise the model’s security, effectiveness, or ethical behavior.

• [OWASP – LLM04] Model Denial-of-Service refers to when an attacker interacts with an LLM in a method that consumes an exceptionally high number of resources, which results in a decline in the quality of service for them and other users, as well as potentially incurring high resource costs.

• [OWASP – LLM05] Supply Chain Vulnerabilities in LLMs can impact the integrity of training data, ML models, and deployment platforms.

• [OWASP – LLM06] Sensitive Information Disclosure vulnerability occurs when LLM applications reveal sensitive information, proprietary algorithms, or other confidential details through their output. This can result in unauthorized access to sensitive data, intellectual property, privacy violations, and other security breaches.

• [OWASP—LLM07] Insecure Plugin Design of LLM plugins can allow a potential attacker to construct a malicious request to the plugin, which could result in a wide range of undesired behaviors, up to and including remote code execution.

• [OWASP – LLM08] Excessive Agency is the vulnerability that enables damaging actions to be performed in response to unexpected/ambiguous outputs from an LLM (regardless of what is causing the LLM to malfunction; be it hallucination/confabulation, direct/indirect prompt injection, malicious plugin, poorly-engineered benign prompts, or just a poorly-performing model).

• [OWASP – LLM09] Overreliance can occur when an LLM produces erroneous information and provides it authoritatively.

• [OWASP – LLM10] Model Theft involves replicating a proprietary AI model using query-based attacks.

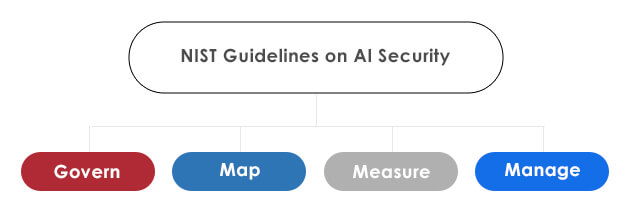

NIST Guidelines on AI Security

To address the above-mentioned risks, the US National Institute of Standards and Technology (NIST) has developed guidelines to assist businesses in enhancing the security of their AI systems, as mentioned in the NIST AI Security Framework 1.0. These guidelines emphasize the need to:

• Govern: A culture of AI Risk Management is cultivated and present.

• Map: Context is recognized, and the risks related to the context are identified.

• Measure: Identified risks are assessed, analyzed, or tracked.

• Manage: Risks are prioritized and acted upon based on a projected impact.

How does Tx Ensures Comprehensive Security Testing for AI and LLM?

At Tx, we understand the critical need for thorough security testing of AI and LLM implementations. Our Red Team Assessment approach holistically evaluates AI systems, identifying and mitigating potential vulnerabilities. Here’s how we ensure maximum coverage and results:

Adversarial Testing

Our team simulates real-world attack scenarios to test the resilience of AI models against adversarial inputs. By mimicking potential threat actors, we identify weaknesses and enhance the robustness of AI systems.

Data Security and Privacy Audits

We conduct rigorous audits to ensure AI systems comply with data protection regulations and best practices. This includes implementing privacy-preserving techniques such as differential privacy and federated learning.

Bias and Fairness Analysis

Our experts analyze AI models for biases and implement corrective measures to ensure fair and ethical outcomes. We use techniques like re-sampling, re-weighting, and adversarial debiasing to mitigate biases in AI systems.

Continuous Monitoring and Incident Response

We set up continuous monitoring systems to detect real-time anomalies and potential security breaches. Our incident response team is always on standby to address any security incidents promptly.

Comprehensive Penetration Testing

Our Cyber Security CoE Team conducts in-depth penetration testing, exploiting potential vulnerabilities in AI and LLM implementations. This helps identify and rectify security gaps before they can be exploited by malicious actors.

Compliance Assurance

We ensure that your AI systems comply with relevant industry standards and regulations. Our team stays updated with the latest compliance requirements to help your business avoid legal and regulatory pitfalls.

Summary

AI and LLM technologies are becoming crucial parts of business operations, and security testing is crucial. At Tx, we utilize our expertise in red team assessment to offer comprehensive security testing strategies for AI and LLM implementation. We ensure your systems are compliant, secure, and resilient against threats. Our teams ensure that your systems comply with guidelines such as NIST and OWASP to enable you to harness the full capabilities of AI while protecting critical assets.

Contact our experts today to learn more about how Tx can help you secure your AI and LLM systems.

The post The Role of Security Testing for AI and LLM Implementations in Enterprises first appeared on TestingXperts.