Just a few months after exploring PaLM APIs (which are now legacy!), it’s time to give Gemini Pro Vision a shoutout!

Introduction

Recently, the field of artificial intelligence has seen an accelerating pace of innovation, with new models released in rapid succession. Multimodality in particular has been a focal point of discussion this year, because integrating diverse data types and input modalities is changing what these systems can do. Multimodal models, which combine visual, textual, and sometimes audio data, are leading this wave. They can interpret a much wider range of human information than models limited to a single modality.

In this dynamic landscape, computer vision models have become increasingly important, proving vital in practical applications ranging from image recognition to real-time video analysis. They are also central to building intelligent systems that are more cohesive and accessible.

This tutorial explores the capabilities of Gemini Pro Vision, a prime example of a multimodal model that captures the essence of these AI advancements. By combining sophisticated computer vision with natural language processing and other modalities, it can be applied across many domains.

A good illustration of the utility of multimodal models is Visual Question Answering (VQA), where the model interprets an image and a text question together in order to answer that question. VQA shows the strength of combining modalities: models like Gemini Pro Vision can not only perceive visual data but also analyze it and describe it in a meaningful context, which greatly improves user engagement and accessibility.

What Exactly is VQA?

Visual Question Answering (VQA) is a field of artificial intelligence that combines techniques from computer vision and natural language processing to enable machines to answer questions about images.

In a VQA system, the model is provided with an image and a text-based question about the image. The goal is for the model to analyze the visual content and then generate a relevant, accurate answer based on that analysis.

Here’s how VQA typically works:

- Image Analysis: The model uses computer vision techniques to understand the content and context of the image. This might involve recognizing objects, their attributes, and relationships between them.

- Question Understanding: The model processes the question, often using natural language processing techniques, to understand what is being asked.

- Answer Generation: After analyzing both the image and the question, the model synthesizes the information to produce an answer. This can be in various forms, such as a word, phrase, sentence, or even a number.
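To make the three stages concrete, here is a deliberately tiny, rule-based sketch. It is not how Gemini or any neural VQA model works internally, since real models learn these stages jointly from data. Here the "image analysis" output is a hypothetical hand-written list of detected objects, the question parser only recognizes two toy templates, and every name (`DetectedObject`, `analyze_image`, `understand_question`, `generate_answer`, `answer`) is made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectedObject:
    """Hypothetical output of an image-analysis step: a label plus one attribute."""
    label: str
    color: str


def analyze_image(detections: list[DetectedObject]) -> dict[str, list[DetectedObject]]:
    """Stage 1 (image analysis): index detected objects by label.

    A real model would extract these from pixels; here they are given directly.
    """
    scene: dict[str, list[DetectedObject]] = {}
    for obj in detections:
        scene.setdefault(obj.label, []).append(obj)
    return scene


def understand_question(question: str) -> tuple[str, str]:
    """Stage 2 (question understanding): map a question to (intent, target label).

    Only two toy templates are supported:
    "How many <label>s ...?" and "What color is the <label>?"
    """
    words = question.lower().rstrip("?").split()
    if words[:2] == ["how", "many"]:
        return "count", words[2].removesuffix("s")
    if words[:3] == ["what", "color", "is"]:
        return "color", words[-1]
    raise ValueError(f"Unsupported question: {question!r}")


def generate_answer(scene: dict[str, list[DetectedObject]], intent: str, target: str) -> str:
    """Stage 3 (answer generation): combine scene facts with the question intent."""
    matches = scene.get(target, [])
    if intent == "count":
        return str(len(matches))  # an answer can be a number...
    if not matches:
        return f"I don't see a {target}."  # ...a sentence...
    return matches[0].color  # ...or a single word


def answer(detections: list[DetectedObject], question: str) -> str:
    """Run the three stages in order."""
    scene = analyze_image(detections)
    intent, target = understand_question(question)
    return generate_answer(scene, intent, target)
```

For example, with made-up detections of two purple orchids and a green leaf, `answer(detections, "How many orchids are there?")` returns `"2"` and `answer(detections, "What color is the orchid?")` returns `"purple"`. A model like Gemini Pro Vision replaces all three hand-written stages with a single learned model that accepts free-form questions.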

Gemini Pro Vision

Gemini 1.0 Pro Vision is a model developed by Google that supports multimodal prompts. You can include text, images, and video in your prompt requests and get text or code responses.

Some technical details from the documentation:

- Last updated: December 2023

- Input: text and images

- Output: text

- Can take multimodal inputs, text and image.

- Can handle zero, one, and few-shot tasks.

- Input token limit: 12288

- Output token limit: 4096

- Model safety: Safety settings are applied automatically and can be adjusted by developers.

- Rate limit: 60 requests per minute
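The 60 requests-per-minute limit matters as soon as you loop over a folder of photos. Below is a minimal client-side sliding-window throttle, sketched under the assumption that you simply want to stay under that quota before the server starts rejecting requests. The class name and the injectable `clock`/`sleep` parameters are hypothetical. The helper cannot see the server's own accounting, and the limit itself may have changed since the documentation above was written.

```python
import time
from collections import deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most `max_requests` calls per `window_s` seconds (client side only).

    Hypothetical helper for staying under a quota such as 60 requests/minute;
    it never talks to the API itself.
    """

    def __init__(self, max_requests: int = 60, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock
        self._stamps: deque[float] = deque()  # start times of recent requests

    def _evict(self, now: float) -> None:
        # Drop timestamps that have fallen out of the window.
        while self._stamps and now - self._stamps[0] >= self.window_s:
            self._stamps.popleft()

    def wait_time(self) -> float:
        """Seconds to wait before the next request is allowed (0.0 if allowed now)."""
        now = self.clock()
        self._evict(now)
        if len(self._stamps) < self.max_requests:
            return 0.0
        return self.window_s - (now - self._stamps[0])

    def acquire(self, sleep: Callable[[float], None] = time.sleep) -> None:
        """Block until a slot is free, then record this request."""
        delay = self.wait_time()
        if delay > 0:
            sleep(delay)
        now = self.clock()
        self._evict(now)
        self._stamps.append(now)
```

Calling `limiter.acquire()` right before each model request is enough. A sliding window is used instead of a fixed per-minute counter so that bursts straddling a minute boundary still respect the limit.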

These models are improving fast, so this information may become outdated very soon (just like the PaLM APIs from only a few months ago). In fact, the word “old” has taken on a different meaning in this rush of AI.

Getting Started with Gemini Pro Vision

Alright, it’s coding time.

Since we’re discussing images, I took advantage of my full camera roll and revisited my recent trip to Singapore, where I attended a Google event for Developer Creators and Online Communities.

I had the opportunity to visit the National Orchid Garden, where I took numerous pictures to show my mom, as her favorite flower is the orchid. I will use this one, as it also features her favorite color.

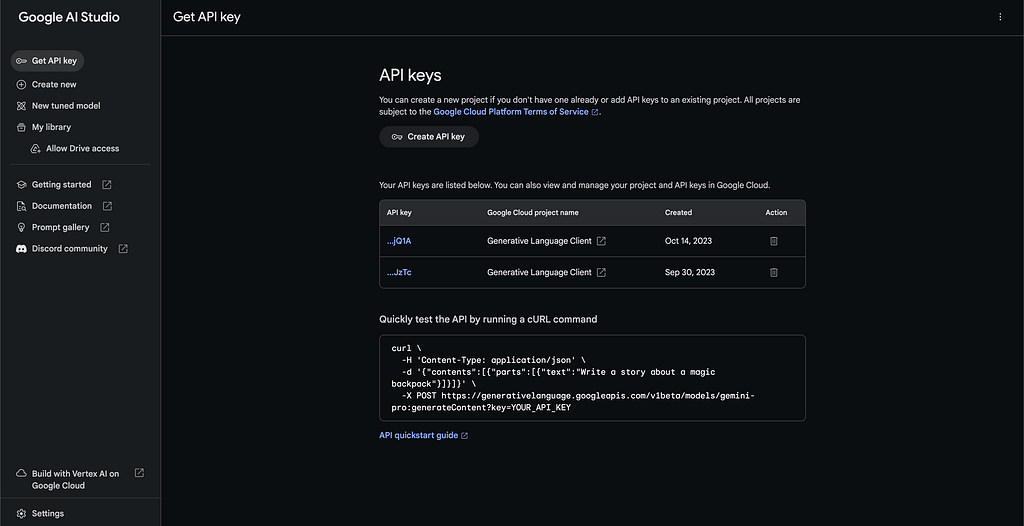

Before diving into the Colab notebook, where I’ve combined the step-by-step guide with the code, note that you’ll need an API key to use the model featured in this tutorial.

To obtain this API key, you should visit https://aistudio.google.com/ and navigate to the “Get API Key” section. The process is quite straightforward!
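As a rough idea of what a single VQA request looks like in code, here is a minimal sketch using the `google-generativeai` Python package (`pip install google-generativeai pillow`) as it worked while Gemini Pro Vision was current. Assumptions: the key is stored in an environment variable named `GOOGLE_API_KEY` (my choice of name), the helper names `load_api_key` and `ask_image` are hypothetical, and the model name `gemini-pro-vision` may since have been retired in favor of newer Gemini models, so check the current documentation before running it. The SDK is imported inside the function so the file can be loaded without it installed.

```python
import os
from pathlib import Path


def load_api_key(env_var: str = "GOOGLE_API_KEY") -> str:
    """Read the AI Studio API key from an environment variable (name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} to the key from https://aistudio.google.com/")
    return key


def ask_image(image_path: str, question: str,
              model_name: str = "gemini-pro-vision") -> str:
    """Send one image plus one text question and return the model's text answer.

    Needs network access, a valid key, and the `google-generativeai` and
    `pillow` packages.
    """
    path = Path(image_path)
    if not path.is_file():
        raise FileNotFoundError(path)
    api_key = load_api_key()

    # Imported lazily so the helpers above work without the SDK installed.
    import google.generativeai as genai
    from PIL import Image

    genai.configure(api_key=api_key)
    model = genai.GenerativeModel(model_name)
    with Image.open(path) as img:
        # A multimodal prompt is a list of parts: here, the question and the image.
        response = model.generate_content([question, img])
    return response.text
```

With a key set and a hypothetical local file `orchid.jpg`, a call such as `print(ask_image("orchid.jpg", "What kind of flower is this, and what color is it?"))` sends the question and the photo together and prints the model's answer.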

For reference, here’s the tutorial link:

If you have any questions, feel free to leave a comment on this article.

I hope this helps you understand the potential of these models! 👋

[ML Story] Asking questions to images with Gemini Pro Vision was originally published in Google Developer Experts on Medium, where people are continuing the conversation by highlighting and responding to this story.
