Hey there! I am Aritra Roy Gosthipaty, a GDE from India (West Bengal) trying to lower the barrier of Deep Learning with tutorials, blog posts, and various other resources. I call myself a Deep Learning Educator and am currently working at PyImageSearch.

To achieve what I do for a living, I had to choose a platform that is

Simple. Flexible. Powerful: the exact motto of Keras.

In this ML Story, I share my journey into the Deep Learning world with Keras. I am confident I will refer to Keras as a friend rather than a platform, so please bear with me!

Let me introduce you to Keras 👋🏻

My first time looking at Keras code was with Laurence Moroney’s Coursera course. To be honest, it was overwhelming at first to find a Sequential model being compiled and fit on a dataset all within 10 lines of code.

# Get the train, val, and test dataset.

train_ds, val_ds, test_ds = load_dataset(...)

# Build a Sequential model.

model = keras.Sequential([...])

# Compile the model.

model.compile(...)

# Train the model.

model.fit(train_ds, validation_data=val_ds, ...)

# Evaluate the trained model on the test set.

model.evaluate(test_ds)

Being overwhelmed is good! Why? Being overwhelmed pushes you to either stop or look further. I looked further. I knew this would be out of my league, but two years in, it has proved to be the best decision I have ever made.

My take on Keras 😇

Let me ask you a question.

How would you build a Deep Learning framework that is:

- Simple — enough for anyone to start using the framework from the word go.

- Powerful — enough for anyone to create any Deep Learning model with your framework.

- Flexible — enough for anyone to make customizations and extend your framework.

If this question intrigues you, you’ll find the Keras philosophy fascinating. So let’s delve into it.

Simple

Keras is very welcoming. If you know the theory behind Deep Learning (models, optimizers, loss functions, backpropagation, etc.), using Keras will be a piece of cake.

When you think about a model, you think about it visually. You see your inputs flowing through layers and then computing a prediction, as shown in Figure 1.

Keras helps you map your thoughts to the computer elegantly. Let’s see this in action with a code snippet.

import keras

# The input layer: each sample is a vector of 32 features

inputs = keras.Input(shape=(32,))

# The input flows through the layers

x = keras.layers.Dense(16)(inputs)

x = keras.layers.Dense(8)(x)

# Compute the output

outputs = keras.layers.Dense(4, activation="softmax")(x)

# Build the model

model = keras.Model(inputs, outputs)

Yep! It is that simple.

When you can visualize a model just by reading its code, it tells you something about the framework: it was built with a clear focus on user-friendliness, truly embodying the Keras motto, ‘Deep Learning for Humans’.

Powerful

How do you define something as powerful?

I believe power is felt when you can achieve anything with your tools. That is what makes the tools powerful.

Figure 2 shows the landing page of Keras examples. The website is a collection of open-source examples written in Keras. The examples are diverse (from Image Classification to NeRFs), simple (understanding DDIMs under 300 lines of code), and, most importantly, thoroughly reviewed (Francois himself takes care of the review process).

This collection of examples is a statement of how powerful Keras is as a framework. Keras offers the flexibility and robustness to build a wide variety of deep learning models.

Flexible

Have you ever noticed a paradigm shift in your thinking after working in a particular language for a long time? If you use Java day to day, say, you will think differently from a Python programmer.

It is the same with platforms. You align with its ideas and structures once you use a platform for too long. Keras, for me, is a platform that does not mold my thinking. It is liberating. It is flexible enough for me to adapt it into anything I want it to be.

Let’s say you like Keras and you are a Computer Vision evangelist like me. Keras is flexible and can be extended into a computer-vision-first platform. KerasCV is just that. The Keras team also has KerasNLP for all NLP lovers.

Please show us some code 👀

Now that we know how lovely Keras is, let’s focus on My Keras Chronicles. In this section, I jot down my Keras anecdotes. I hope you find them inspiring and go on to build things far bigger than I can ever imagine!

Involution Neural Networks

GitHub PR: https://github.com/keras-team/keras-io/pull/555

Motivated by the works of Sayak Paul, Aakash Nain, and many more, I sat reading an interesting paper. I read the entire thing and realized I could reimplement it on a minimal scale.

Opening a colab notebook, I started converting the pseudocode provided in the paper to Keras code. It was magical how all the operations were so well documented in the Keras ecosystem.

I was training my model in no time. Now that the model had been trained, I had to further analyse the involution kernels. I cannot (and may never be able to) express how happy I was to see the kernel activation maps (Figure 3).

After I was happy with what I had, I created a pull request to the Keras IO GitHub repository. Soon enough, with a thorough review, this became my first Keras example.

3D volumetric rendering with NeRF

GitHub PR: https://github.com/keras-team/keras-io/pull/576

We (Ritwik Raha and I) often talk about this example.

I was trying to wrap my head around this newly released paper, but I needed some help. Ritwik was the only person I knew who had previously worked with 3D data, so I approached him to work on this with me.

There were sleepless nights and also the frustration of not being able to achieve the exact replication of the results. So what you see in Figure 4 took a lot of time.

What was truly rewarding was the positive reception of our work from the community. This also got us our first-ever TFCommunitySpotlight award.

Oh! And did you know that our work was not even submitted to TensorFlow by us? Sayak Paul submitted it and said, “You two deserve this!”.

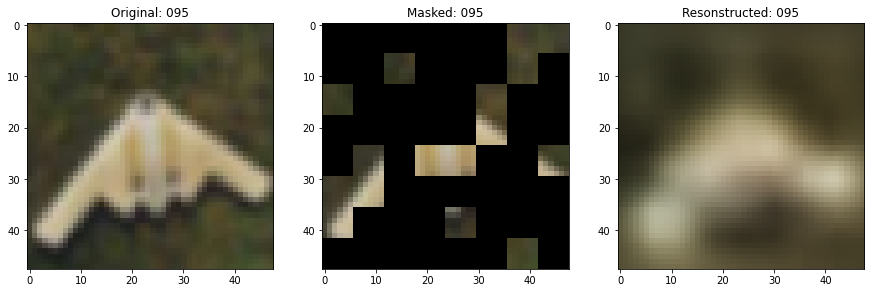

Masked image modeling with Autoencoders

GitHub PR: https://github.com/keras-team/keras-io/pull/715

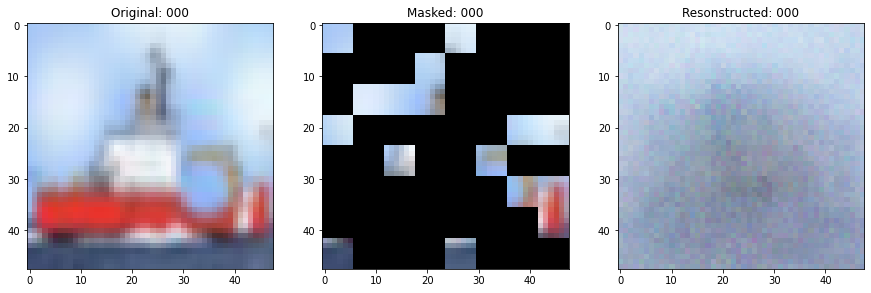

“There is no open-source code for this one, Aritra”, I thought. Given the lucidity of the paper, I felt confident that I could figure out the code on my own. I HAD TO BE EXTRA CAREFUL NOW because I could not refer to the official code.

Enter Sayak Paul: he wholeheartedly agreed to collaborate with me. After all, the first author is none other than Kaiming He!

We prototyped the masking mechanism and started training the model as soon as possible. The task was to input a masked image and reconstruct the entire image. Thanks to Keras Callbacks, I built a custom callback that could use the model (which is currently training) to predict reconstructions.
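To give a feel for what a masking mechanism like this does, here is a minimal NumPy sketch of random patch masking. It is not the code from our example: the function name `random_patch_mask`, the 4-pixel patch size, and the 75% mask ratio are assumptions chosen for illustration, and the hidden patches are simply zeroed out so the effect is easy to inspect.

```python
import numpy as np


def random_patch_mask(image, patch_size=4, mask_ratio=0.75, seed=0):
    """Split an image into square patches and hide a random subset of them.

    Returns the masked image and a boolean array marking the hidden patches.
    Assumes the image height and width are divisible by `patch_size`.
    """
    rng = np.random.default_rng(seed)
    height, width, _ = image.shape
    rows, cols = height // patch_size, width // patch_size
    num_patches = rows * cols
    num_masked = int(mask_ratio * num_patches)

    # Shuffle the patch indices and hide the first `num_masked` of them.
    shuffled = rng.permutation(num_patches)
    is_masked = np.zeros(num_patches, dtype=bool)
    is_masked[shuffled[:num_masked]] = True

    # Expand the per-patch mask to pixel resolution.
    patch_mask = is_masked.reshape(rows, cols)
    pixel_mask = np.repeat(np.repeat(patch_mask, patch_size, axis=0), patch_size, axis=1)

    # Zero out every pixel that belongs to a hidden patch.
    masked_image = np.where(pixel_mask[..., None], 0.0, image)
    return masked_image, is_masked


# Hypothetical 32x32 RGB image filled with random values.
image = np.random.default_rng(1).random((32, 32, 3))
masked_image, is_masked = random_patch_mask(image)
print(is_masked.sum(), "of", is_masked.size, "patches hidden")  # 48 of 64 patches hidden
```

The model only ever sees the visible patches and is asked to reconstruct the rest, which is why it helps to look at its reconstructions as training progresses.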

From Epoch 0 (Figure 6) to Epoch 95 (Figure 7), you can see the model slowly but steadily progressing.

We published this blog post (with code) before the authors open-sourced their official implementation.

Investigating Vision Transformer Representations

GitHub PR: https://github.com/keras-team/keras-io/pull/853

“We did it!”

That is Sayak Paul telling me that we cracked the last piece of the puzzle to visualize the activation maps of DINO on a 🦕 (as shown in Figure 8). The problem that we faced was to interpolate the embedding space to take any generic resolution image as input to the DINO model.

Probing ViTs is one of the most thorough pieces of research I have ever done. Sayak Paul and I set out to investigate various ViT (Vision Transformer) representations. We planned to cover four methods:

- Method I: Mean attention distance

- Method II: Attention Rollout

- Method III: Attention heatmaps

- Method IV: Visualizing the learned projection filters
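Of the four methods, attention rollout is compact enough to sketch in a few lines. The NumPy snippet below is a minimal sketch, assuming the usual formulation: average the attention heads of each layer, add the identity matrix to account for the residual connection, renormalize the rows, and multiply the layers together. The function name `attention_rollout` is hypothetical and random attention weights stand in for a real ViT, so this is an illustration, not the code from our example.

```python
import numpy as np


def attention_rollout(attentions):
    """Combine per-layer attention maps into one token-to-token map.

    `attentions` is a list of arrays shaped (heads, tokens, tokens),
    ordered from the first layer to the last.
    """
    num_tokens = attentions[0].shape[-1]
    rollout = np.eye(num_tokens)
    for layer_attention in attentions:
        # Average over heads, then add the identity for the residual connection.
        fused = layer_attention.mean(axis=0) + np.eye(num_tokens)
        # Renormalize so that every row is a probability distribution again.
        fused = fused / fused.sum(axis=-1, keepdims=True)
        # Chain this layer's attention onto the layers below it.
        rollout = fused @ rollout
    return rollout


# Hypothetical stand-in for real ViT attention: 4 layers, 3 heads, 5 tokens.
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 3, 5, 5))
weights = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)

rollout = attention_rollout(list(weights))
print(rollout.shape)  # (5, 5)
```

Each row of the result still sums to 1, so a row can be read as how much every input token contributes to that output token once all layers are taken into account.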

The amount of knowledge I gained working on this project cannot be quantified. I would always recommend collaborating on a project, as it’s a great way to learn and enjoy the process.

I am glad I got to work with you, Sayak Da (if you are reading this).

A note of thanks

As I reflect on my journey, I’m filled with immense gratitude for the individuals and experiences that have shaped my path in Deep Learning.

First, a massive thank you to Francois Chollet for creating Keras. Keras has transformed my thoughts into code and models, and for that, I am eternally grateful.

To Sayak Paul, your guidance and mentorship have been invaluable. I wouldn’t be where I am today without you.

To my close friends — Ayush, Devjyoti, and Snehangshu, thank you for embarking on this Deep Learning journey with me. Those initial explorations with Keras were the spark that ignited my interest and set me on this path.

A special shoutout to Ritwik Raha. Your partnership in many of our Keras blog posts has been a significant part of this journey. Our shared late nights, ideas, and the joy of accomplishment have enriched my experiences in ways I could not have imagined.

To Sohini, thank you for your critical eye and invaluable insights in reviewing the first draft.

Last but not least, I would like to thank Hee Jung for selecting me as this month’s ML Story author.

I am sincerely grateful and look forward to many more years of learning, exploration, and shared success in the world of Deep Learning.

[ML Story] My Keras Chronicles was originally published in Google Developer Experts on Medium.
